Chapter 5 Regression Predictive Models

In this chapter, we consider predictive models built from ordinary least squares models. Even in this case, that we have been thinking about since Chapter 1, there are new things to think about when focusing on predictive models. Our primary goal is variable selection for the purposes of prediction. However, sometimes, it may be more efficient to combine predictors into new predictors, rather than using the predictors as given. This process will also be lumped under the general term of “variable selection” for lack of a better descriptor.

5.1 Preprocessing

First, we talk about preprocessing the predictors. A common technique is to center and scale the predictors, so that all of the predictors are on a common scale. Some techniques that we will see in the next chapter require the predictors to be on a similar scale, while there is not usually a down side in terms of predictive power for this in a regression model. However, it can be harder to interpret the coefficients of ordinary least squares regression, since the predictors are no longer in their original scales.

Let’s look at the insurance data set from the previous chapter to see how this could be done via R.

## age sex bmi children smoker

## Min. :18.00 female:662 Min. :16.00 Min. :0.000 no :1064

## 1st Qu.:27.00 male :676 1st Qu.:26.30 1st Qu.:0.000 yes: 274

## Median :39.00 Median :30.40 Median :1.000

## Mean :39.21 Mean :30.67 Mean :1.095

## 3rd Qu.:51.00 3rd Qu.:34.70 3rd Qu.:2.000

## Max. :64.00 Max. :53.10 Max. :5.000

## region expenses

## northeast:324 Min. : 1122

## northwest:325 1st Qu.: 4740

## southeast:364 Median : 9382

## southwest:325 Mean :13270

## 3rd Qu.:16640

## Max. :63770##

## Call:

## lm(formula = expenses ~ ., data = dd)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11302.7 -2850.9 -979.6 1383.9 29981.7

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -11941.6 987.8 -12.089 < 2e-16 ***

## age 256.8 11.9 21.586 < 2e-16 ***

## sexmale -131.3 332.9 -0.395 0.693255

## bmi 339.3 28.6 11.864 < 2e-16 ***

## children 475.7 137.8 3.452 0.000574 ***

## smokeryes 23847.5 413.1 57.723 < 2e-16 ***

## regionnorthwest -352.8 476.3 -0.741 0.458976

## regionsoutheast -1035.6 478.7 -2.163 0.030685 *

## regionsouthwest -959.3 477.9 -2.007 0.044921 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 6062 on 1329 degrees of freedom

## Multiple R-squared: 0.7509, Adjusted R-squared: 0.7494

## F-statistic: 500.9 on 8 and 1329 DF, p-value: < 2.2e-16We see that the numeric predictors are age and bmi, which we can center and scale by subtracting the mean and dividing by the standard deviation.

Compare the model with the scaled predictors to the one with the raw predictors above to see what changes.

##

## Call:

## lm(formula = expenses ~ ., data = dd)

##

## Residuals:

## Min 1Q Median 3Q Max

## -11302.7 -2850.9 -979.6 1383.9 29981.7

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 8532.8 410.9 20.764 < 2e-16 ***

## age 3608.6 167.2 21.586 < 2e-16 ***

## sexmale -131.4 332.9 -0.395 0.693255

## bmi 2069.1 174.4 11.864 < 2e-16 ***

## children 475.7 137.8 3.452 0.000574 ***

## smokeryes 23847.5 413.1 57.723 < 2e-16 ***

## regionnorthwest -352.8 476.3 -0.741 0.458976

## regionsoutheast -1035.6 478.7 -2.163 0.030685 *

## regionsouthwest -959.3 477.9 -2.007 0.044921 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 6062 on 1329 degrees of freedom

## Multiple R-squared: 0.7509, Adjusted R-squared: 0.7494

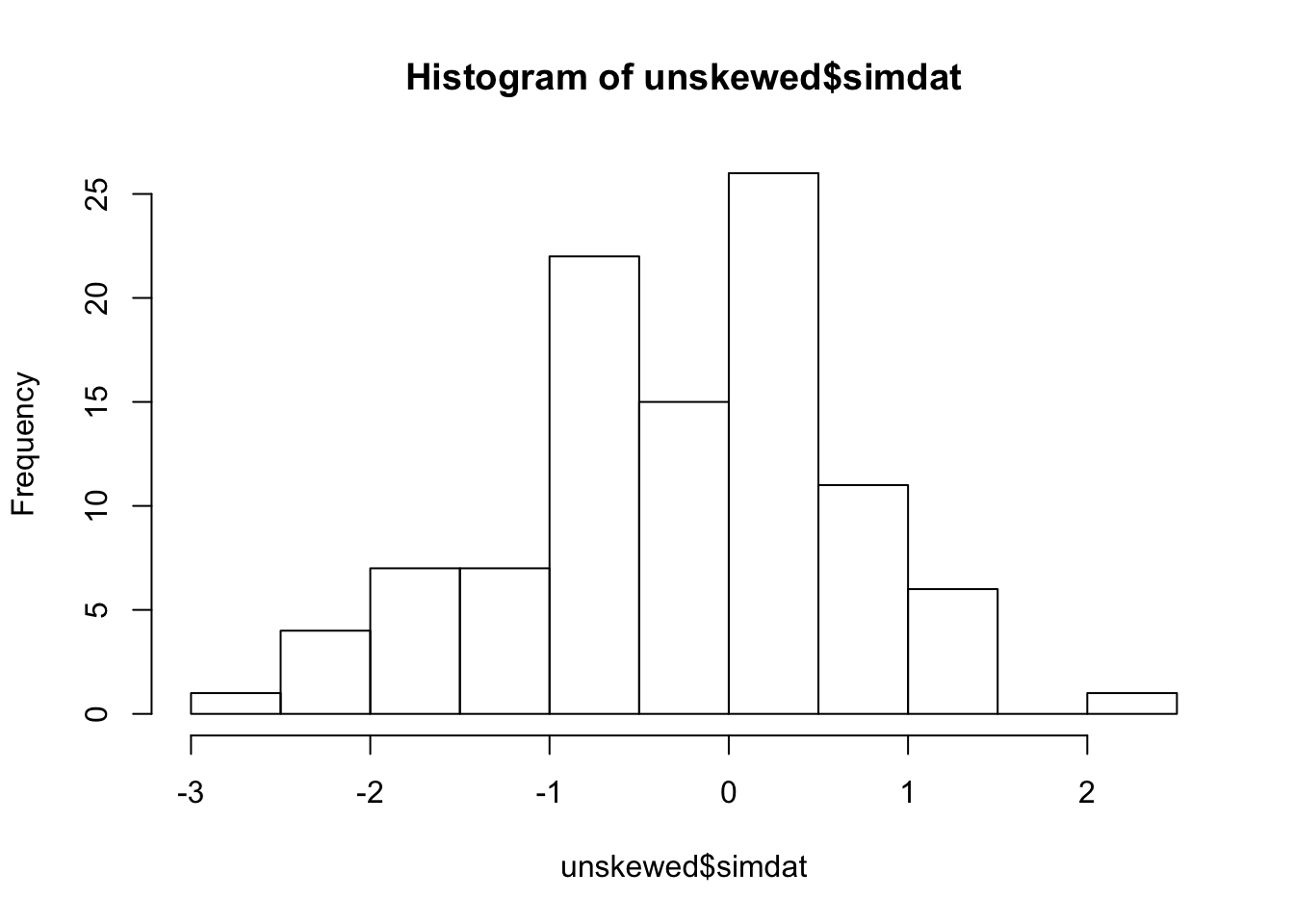

## F-statistic: 500.9 on 8 and 1329 DF, p-value: < 2.2e-16Another technique is to deskew the predictors. Data that is very skew often has points that look like outliers, and those can be high leverage points for the model, meaning that they may overly impact the prediction of other values. Since values that are at the edge of the data range may follow a different pattern than values in the center of the data, this can adversely affect RMSE. As an example, suppose the true generative process of the data is \(y = \epsilon a x^b\), where \(\epsilon\) is a mean 1 random variable. If we take the logs of both sides, then we get \(\log y = \log a + b \log x + \log \epsilon\), which looks very much like our model for simple linear regression.

When trying to decide whether to deskew predictors, cross-validation can be useful. We can model the response both ways, and use the model with the lower RMSE. As always, we should be careful to avoid overfitting, and consider taking the simplest model that has a RMSE within one standard deviation of the lowest.

The process of de-skewing is done to positive variables via the Box-Cox transformation. The Box-Cox transform chooses a value for \(\lambda\) such that the transformed variable \[ x^* = \begin{cases} \frac{x^\lambda - 1}{\lambda} & \lambda\not= 0\\ \log(x)& \lambda = 0 \end{cases} \] We will not discuss the details of how \(\lambda\) is chosen, but the value that is chosen is one that deskews the variable of interest.

For example, suppose we have a random sample from an exponential random variable.

## [1] 1.60463We see that the data is moderately positively skewed. In order to apply the Box-Cox transform we need to put the data into a data frame.

Next, we use caret::preProcess to say what kind of processing we want to do to the data.

Finally, to get the new data frame, we “predict” the new values from the pre-processing model.

We can check that the new data has been unskewed:

## [1] -0.2006273

If we wanted to center, scale and unskew it, we could do the following.

pre_process_mod <- preProcess(df,

method = c("BoxCox", "center", "scale"))

processed_data <- predict(pre_process_mod, newdata = df)

mean(processed_data$simdat)## [1] 1.769472e-17## [1] 15.2 Missing Data

Missing data is a very common problem. We may have a data set with 2000 observations of 50 variables, but each observation is missing information in at least one of the variables. Let’s start by observing the default behaviour of lm when there is missing data.

We use the St Louis housing data set that is required for your project.

We see that this data set consists of 1201 observations of 29 variables. However, some of the variables consist entirely of missing data!

## Mode NA's

## logical 1201Let’s model price on year built and sold date, and see what happens.

As we can see, it throws an error. More generally, the default behavior of R is to remove any observation that contains a missing value for any of the variables.

##

## Call:

## lm(formula = PRICE ~ YEAR.BUILT, data = dd)

##

## Residuals:

## Min 1Q Median 3Q Max

## -828255 -353012 -212364 -20400 64280835

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -11027057 3900975 -2.827 0.00480 **

## YEAR.BUILT 5926 2009 2.950 0.00326 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2225000 on 935 degrees of freedom

## (264 observations deleted due to missingness)

## Multiple R-squared: 0.009223, Adjusted R-squared: 0.008163

## F-statistic: 8.703 on 1 and 935 DF, p-value: 0.003255We see we have 935 degrees of freedom for the F-statistic, which we showed in the previous chapters should have \(n - 2\) degrees of freedom. We compute

## [1] 935and we see that R has kept the 937 observations that don’t have any missing values.

It is a bad idea to build a final model based only on observations that have no missing values. First, as we saw in the previous chapter, the accuracy of predictions tends to increase with the number of observations that we have. Second, it is possible (likely even) that the missing data is not just randomly missing, but the fact that the value of one variable is missing may have an impact on how the other variables are related to the response!

As an example, for the housing data set, if BEDS is missing, that may very well be because the property is not, in fact, a house, but rather a vacant lot. If we remove all observations that have missing values for BEDS, then we remove all information about vacant lots. That means that we are going to need to have some way of dealing with the missing data.

If many, many values of a variable are missing, it may be appropriate to just remove the entire variable. In the extreme case of SOLD.DATE, which is missing all of its observations, then we should definitely just remove the entire variable.

We can use mean/median imputation of the missing values. This means that, for continuous data, we replace all of the missing values with either the mean or the median of the values that are not missing. For categorical data, we could replace all of the missing values with the value that occurs the most frequently in the values that are not missing.

For categorical data, we could create a new level which codes missing data.

We can predict the missing values based on the values that we do have. For example, if ZIP is missing, we could predict the value of ZIP based on the other characteristics of the house under consideration. Then we could use that ZIP to build the model.

For now, we will focus on mean imputation; we leave the last technique for once we have learned a few more predictive modeling techniques.

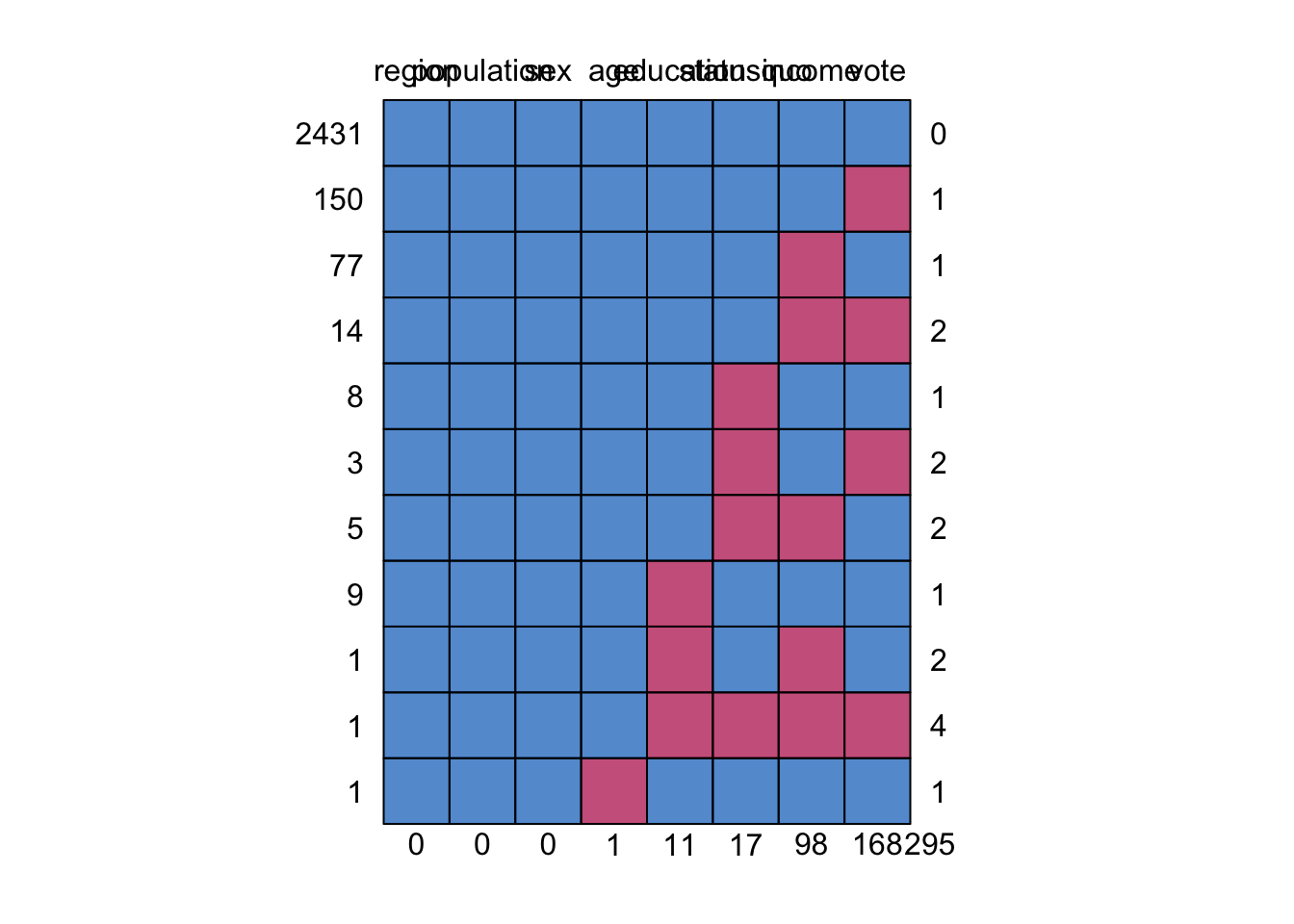

Here is how you can do mean imputation, using the mice package. The mice package does a lot more than just this! Let’s look at the carData::Chile data set. A first useful function in mice is md.pattern, which gives us information about how the missing values are organized.

##

## Attaching package: 'mice'## The following objects are masked from 'package:base':

##

## cbind, rbind

## region population sex age education statusquo income vote

## 2431 1 1 1 1 1 1 1 1 0

## 150 1 1 1 1 1 1 1 0 1

## 77 1 1 1 1 1 1 0 1 1

## 14 1 1 1 1 1 1 0 0 2

## 8 1 1 1 1 1 0 1 1 1

## 3 1 1 1 1 1 0 1 0 2

## 5 1 1 1 1 1 0 0 1 2

## 9 1 1 1 1 0 1 1 1 1

## 1 1 1 1 1 0 1 0 1 2

## 1 1 1 1 1 0 0 0 0 4

## 1 1 1 1 0 1 1 1 1 1

## 0 0 0 1 11 17 98 168 295We see that we have 2431 complete cases, 150 that are only missing vote, 77 that are only missing income and so on. The vast majority of missing data is in the income and vote column, and there is only one observation that is missing more than 2 values.

Unfortunately, we have to replace the missing values in the categorical variables by hand. The snazziest way (possibly) is using mutate_if and fct_explicit_na, which is shown below, but an easier way is to go through the categorical data one at a time, replacing NA with “(Missing)”.

library(tidyverse)

chile <- mutate(chile, vote = fct_explicit_na(f = vote),

sex = fct_explicit_na(f = sex),

education = fct_explicit_na(f = education),

region = fct_explicit_na(f = region))Note that fct_explicit_na has an argument na_level that can also be used to change NA to a value that is a current level. You simply use

and this would change all of the NA values in education to be P.

Of course, any time you find yourself writing the same code over and over again, there is likely a way to automate it. We can do that using mutate_if as below.

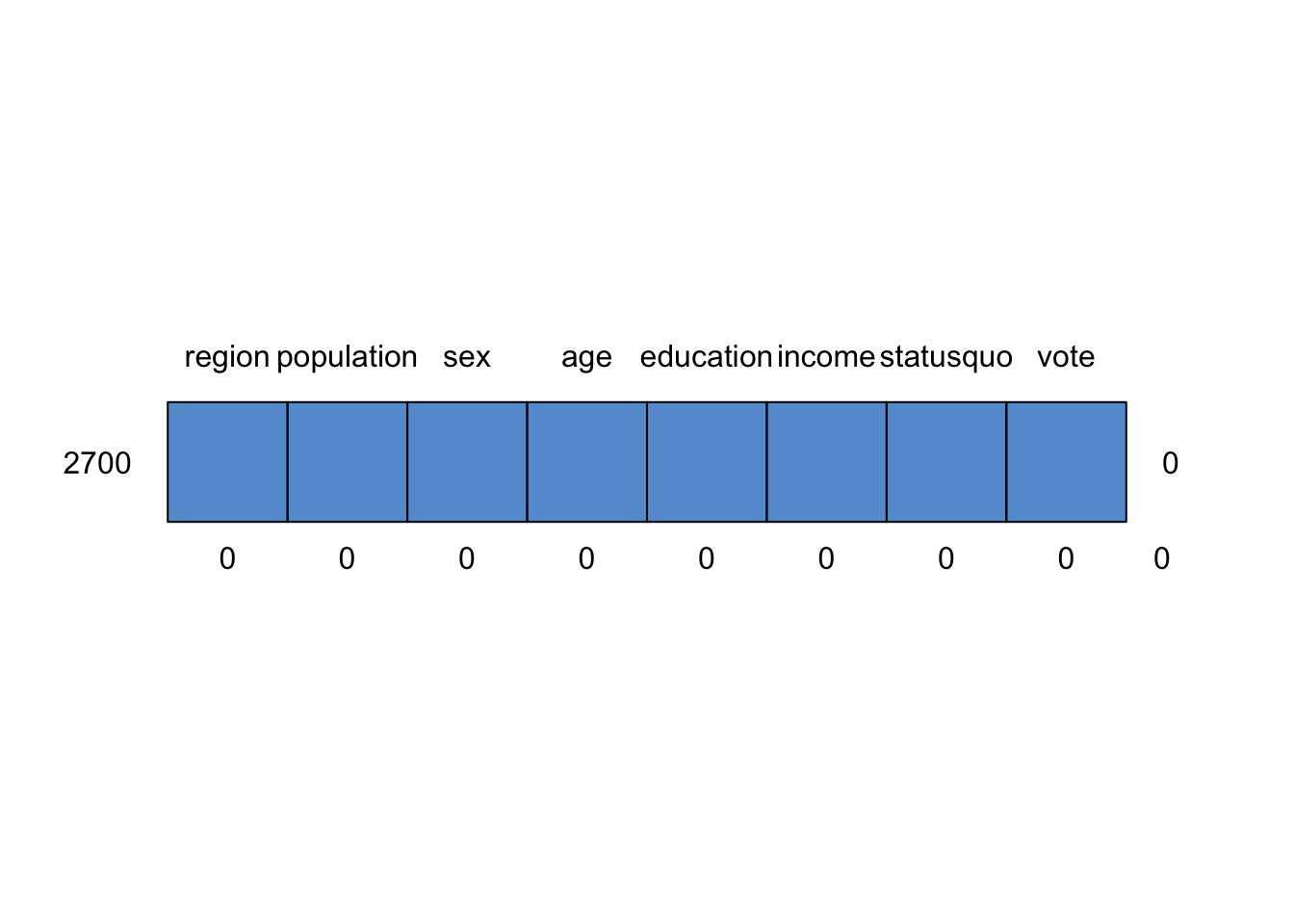

OK, now we only have two more observations with missing values! Let’s see how mice can be used to replace those with the mean.

##

## iter imp variable

## 1 1 age income statusquo## /\ /\

## { `---' }

## { O O }

## ==> V <== No need for mice. This data set is completely observed.

## \ \|/ /

## `-----'

## region population sex age education income statusquo vote

## 2700 1 1 1 1 1 1 1 1 0

## 0 0 0 0 0 0 0 0 0Yay! We have successfully replaced all of the missing values! Later in the book, we will see that this is just a first step, and should by no means be the last thing you do with missing data. It is a really tricky problem.

Exercise 5.1 Consider the housing data set in the class kaggle competition, which is available here.

Perform mean imputation for the numerical data.

- For categorical data, determine whether you can provide reasonable values for the missing data. If so, do it. If not, then replace the missing values with a new level, “(Missing)”.

5.2.1 Predicting when a level is missing from the training set

It can also happen that when you wish to predict a value, an observation is not missing, but it is also not found in the training set. For example, suppose you are trying to predict the PRICE of a house. You decide to model it on square footage and zip code. You build your model, but when you go to predict, there is a house in a zip code that you don’t have any data for. While this data isn’t missing, it causes similar problems to missing data in that we can’t predict a PRICE with that value of zip code.

The remedy for this is similar to the remedy for missing data. We should try to predict the value of the zip code based on other charactersitics of the house. For example, we could look at the lat/long information and pick the zip code that is the closest geographically. More sophisticated would be to predict the zip code using methods that we will cover later in the course. As a last resort, we could reassign the novel zip code in the test set to the most common value in the train set na_level option in fct_explicit_na, or to (Missing) using the if it exists in the train set.

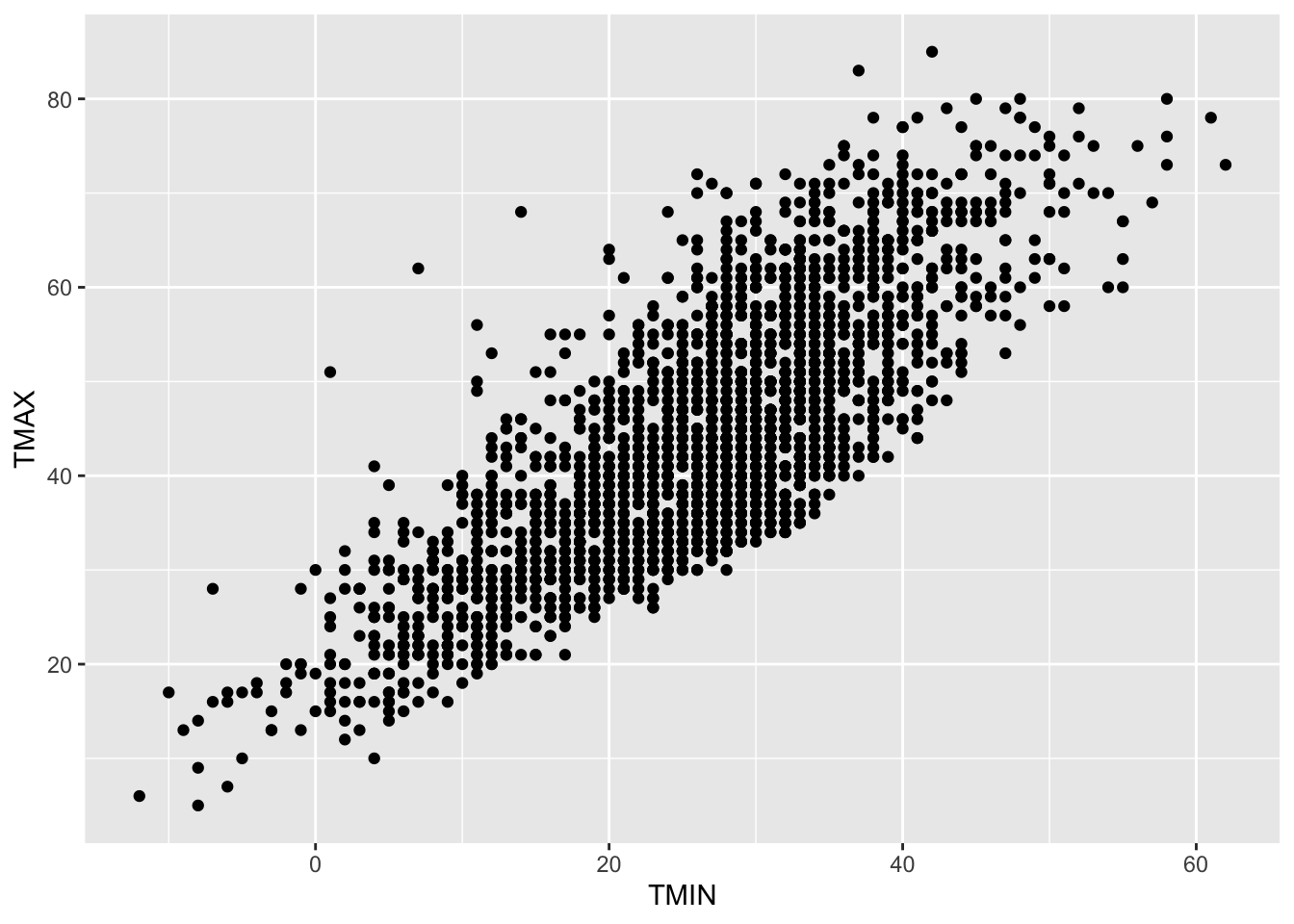

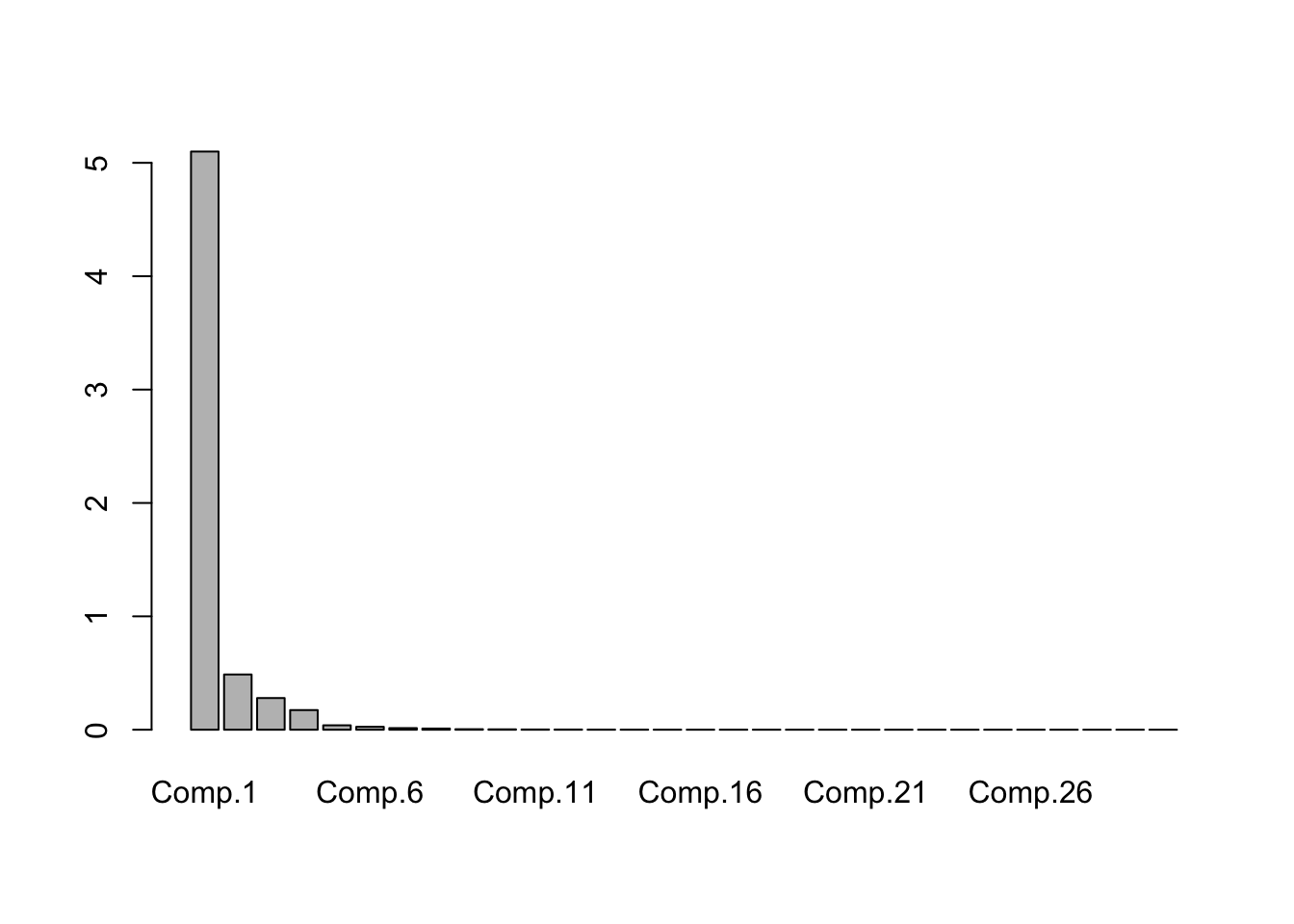

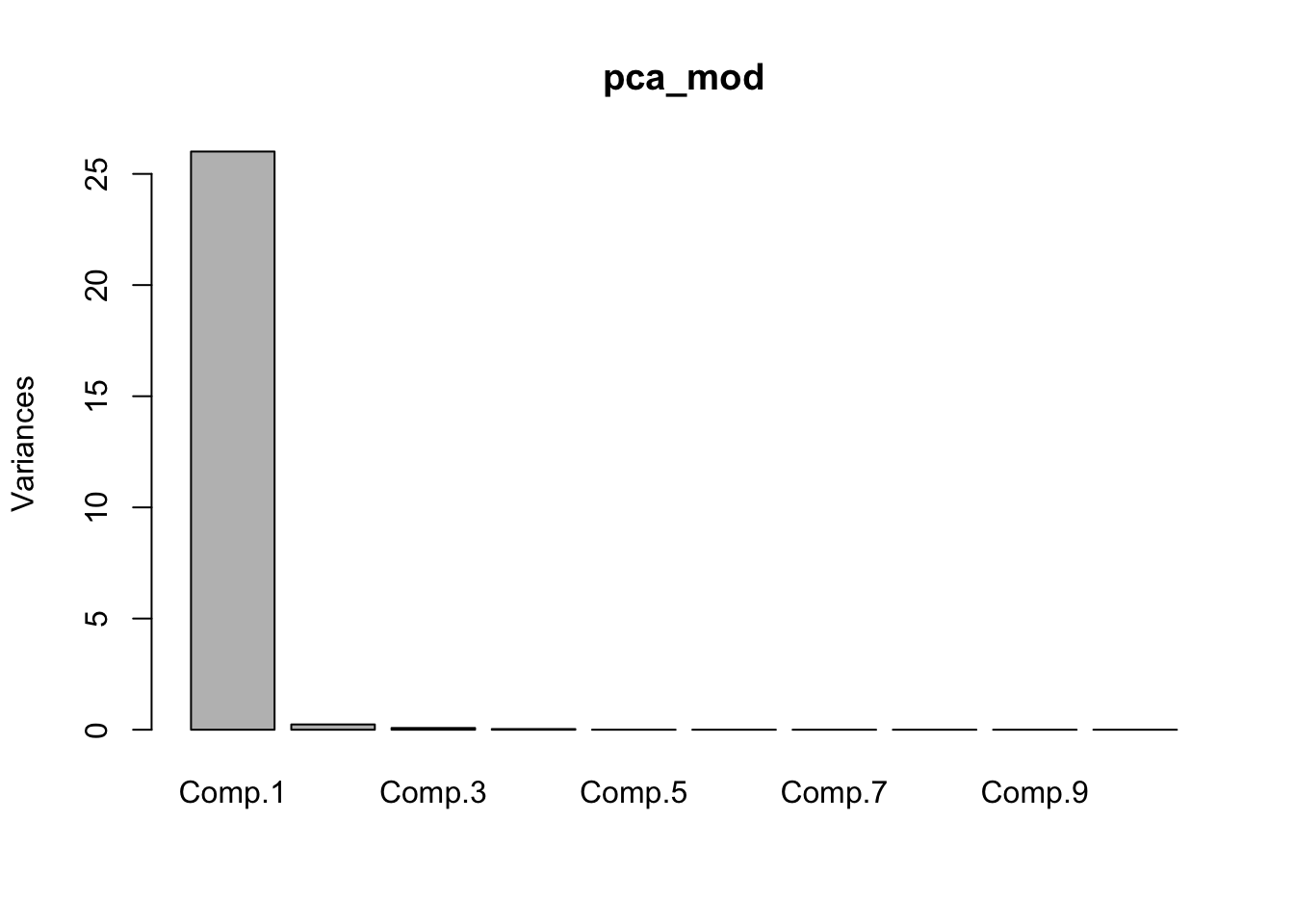

5.4 Aside on PCA

Above, we gave an intuitive idea about what PCA does. The first direction “maximizes the variance” of the data in that direction. The second direction maximizes the variance of the data from among all directions that are orthogonal to the first direction. What does that mean? Let’s check to see whether we really believe that, and see a bit more about how this important dimension reduction technique works!

We start by creating a 3 dimensional data set that is not independent. We will use the mvrnorm from the MASS package, which implements sampling from a multivariate normal distribution. We need a covariance matrix that describes the variance and covariance of the three dimensions.

## [,1] [,2] [,3]

## [1,] 12.126443 2.5831222 1.3146919

## [2,] 2.583122 1.5207420 0.3722671

## [3,] 1.314692 0.3722671 1.9513143Multiplying a matrix by its transpose leads to a symmetric positive semidefinite matrix, which is a valid covariance matrix. (What is positive semidefinite, you ask? Well, it just means that \(<Ax, x> \ge 0\) for all vectors \(x\).)

## [1] TRUESeems to be true. Now, we build a random sample of 1000 points, then we center them.

dat <- MASS::mvrnorm(1000, mu = c(0,0,0), Sigma = cov_mat)

library(caret)

dat <- as.data.frame(dat)

names(dat) <- c("x", "y", "z")

dat <- predict(preProcess(dat, method = c("center", "scale")),

newdata = dat)OK, let’s visualize this data set to see if it looks like there is one direction that is of maximum variance.

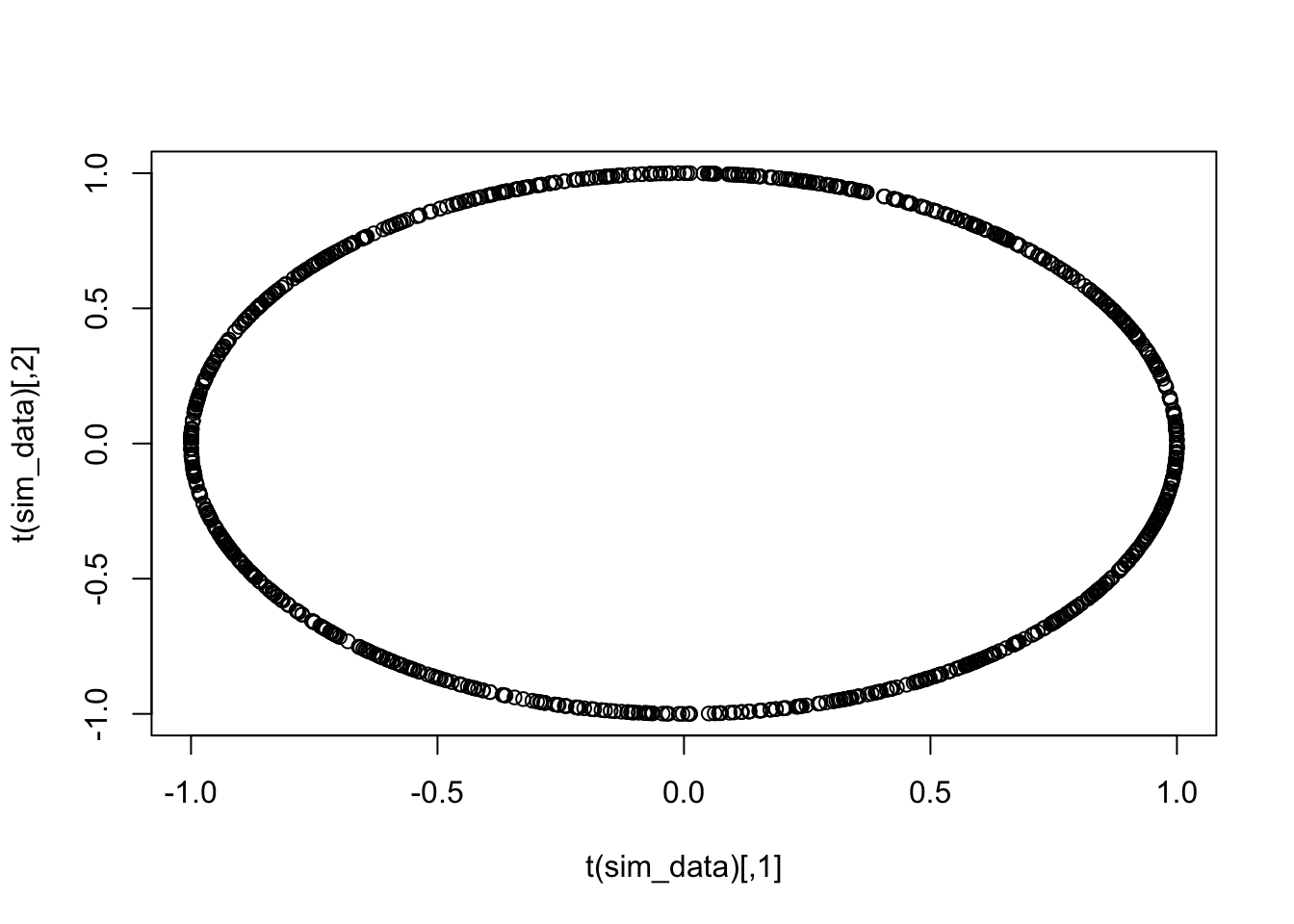

By rotating the figure around, it is pretty clear what is probably the direction of largest variance. But, let’s see how we can do that by hand! We first note that the projection of a vector \(x\) onto the subspace spanned by \(w\) when \(\|w\| = 1\) is given by \[ Px = \langle w, x\rangle w \] It is also an interesting fact that we can randomly sample from the unit sphere by taking a random sample of normal random variables and normalizing. Like this.

## [1] 0.33186776 0.07912053 0.94000199Let’s check on the unit circle that this looks like uniform sampling on the circle.

So, here is our plan for finding approximately the direction with biggest variance. We randomly sample from the sphere, project the point cloud onto the line spanned by the random sample, and then compute the variance. We do this a bunch of times and return the biggest value. We first do it a single time. Let’s define norm to make things a bit more transperent and do it.

norm <- function(x) {

sqrt(sum(x^2))

}

vec <- rnorm(3)

vec <- vec/norm(vec)

mags <- apply(dat,

1,

function(x) norm(ip(x, vec) * vec) * sign(ip(x, vec)))

#' This is not necessary. Since vec is norm 1, this is ip(x, vec)!

var(mags)## [1] 1.129834So, the current largest variance direction is given by vec and the largest variance is given by var(mags). We just repeat this a bunch of times.

largest_variance <- var(mags)

largest_direction <- vec

for(i in 1:1000) {

vec <- rnorm(3)

vec <- vec/norm(vec)

mags <- apply(dat,

1,

function(x) norm(ip(x, vec) * vec) * sign(ip(x, vec)))

if(var(mags) > largest_variance) {

largest_variance <- var(mags)

largest_direction <- vec

print(i)

}

}## [1] 1

## [1] 2

## [1] 5

## [1] 9

## [1] 24

## [1] 56

## [1] 87

## [1] 166OK, we see that the direction of the largest variance is 0.671, 0.643, 0.37. Let’s add a line in that direction to the scatterplot we already had.

df2 <- data.frame(x = largest_direction[1],

y = largest_direction[2],

z = largest_direction[3])

mm <- seq(-6, 6, length.out = 100)

df2 <- bind_rows(lapply(mm, function(x) x * df2))

plot_ly() %>%

add_trace(data=dat,

x = ~x,

y = ~y,

z = ~z,

type = "scatter3d") %>%

add_trace(data=df2,

x = ~x,

y = ~y,

z = ~z,

mode = "line")## No scatter3d mode specifed:

## Setting the mode to markers

## Read more about this attribute -> https://plot.ly/r/reference/#scatter-mode## No trace type specified:

## Based on info supplied, a 'scatter3d' trace seems appropriate.

## Read more about this trace type -> https://plot.ly/r/reference/#scatter3dNow let’s compare this to the princomp function in R.

##

## Loadings:

## Comp.1 Comp.2 Comp.3

## x 0.672 0.189 0.716

## y 0.657 0.294 -0.694

## z 0.342 -0.937

##

## Comp.1 Comp.2 Comp.3

## SS loadings 1.000 1.000 1.000

## Proportion Var 0.333 0.333 0.333

## Cumulative Var 0.333 0.667 1.000We see that the principle direction is (0.67, 0.66, 0.34) and the second direction is (0.203, 0.274, -0.94). Let’s plot those two on our scatter plot and see.

df3 <- as.data.frame(t(pca_mod$loadings[,2]))

df3 <- bind_rows(lapply(mm, function(x) x * df3))

plot_ly() %>%

add_trace(data=dat,

x = ~x,

y = ~y,

z = ~z,

type = "scatter3d") %>%

add_trace(data=df2,

x = ~x,

y = ~y,

z = ~z,

mode = "line") %>%

add_trace(data = df3, x = ~x, y = ~y, z = ~z,

mode = "line")## No scatter3d mode specifed:

## Setting the mode to markers

## Read more about this attribute -> https://plot.ly/r/reference/#scatter-mode## No trace type specified:

## Based on info supplied, a 'scatter3d' trace seems appropriate.

## Read more about this trace type -> https://plot.ly/r/reference/#scatter3d

## No trace type specified:

## Based on info supplied, a 'scatter3d' trace seems appropriate.

## Read more about this trace type -> https://plot.ly/r/reference/#scatter3dIf you have had linear algebra, it may make sense to you that the principle components are the eigenvectors of the covariance matrix of the data. But probably not, because libear algebra doesn’t normally cover the interesting things.

## eigen() decomposition

## $values

## [1] 1691.5223 910.6414 394.8363

##

## $vectors

## [,1] [,2] [,3]

## [1,] -0.6720924 -0.1890762 0.71592035

## [2,] -0.6569602 -0.2937831 -0.69433051

## [3,] -0.3416067 0.9369854 -0.07323383The largest eigenvalue corresponds to the first principle component, and so on. Note that the we can multiply a vector by negative 1 and that doesn’t change the direction that we are talking about, and some of these (all?) have been multiplied by negative one relative to the principle components given by princomp.

5.5 Johnson-Lindenstrauss Dimension Reduction

PCA is pretty cool. But, before we congratulate ourselves too much on being clever, let’s look at the following. Suppose that, instead of carefully choosing the principle components and re-writing the data in that basis, we randomly chose a subspace of an appropriate dimension and projected down onto that. It turns out that random projections also work, at least with very high probability. This is the idea behind Johnson-Lindenstrauss dimension reduction. To illustrate, let’s again use the tecator data set. We treat it as a matrix and then multiply by a matrix of random standard normal rvs of appropriate dimension. Then we use that new matrix as our predictors.

data("tecator")

N <- 10

train_indices <- sample(1:215, 150)

test_indices <- (1:215)[-train_indices]

train <- absorp[train_indices,]

test <- absorp[test_indices,]

jl_mat <- matrix(rnorm(N * ncol(train)), ncol = N)

new_train <- train %*% jl_mat

new_test <- test %*% jl_mat

new_train <- as.data.frame(new_train)

new_test <- as.data.frame(new_test)

new_train$moisture <- endpoints[train_indices,1]

mod <- lm(moisture ~ ., data = new_train)

errs <- predict(mod, newdata = new_test) - endpoints[test_indices,1]

sqrt(mean(errs^2))## [1] 2.693671Now we replicate this to estimate RMSE.

ss <- replicate(100, {

train_indices <- sample(1:215, 150)

test_indices <- (1:215)[-train_indices]

train <- absorp[train_indices,]

test <- absorp[test_indices,]

jl_mat <- matrix(rnorm(N * ncol(train)), ncol = N)

new_train <- train %*% jl_mat

new_test <- test %*% jl_mat

new_train <- as.data.frame(new_train)

new_test <- as.data.frame(new_test)

new_train$moisture <- endpoints[train_indices,1]

mod <- lm(moisture ~ ., data = new_train)

errs <- predict(mod, newdata = new_test) - endpoints[test_indices,1]

mean(errs^2)

})

mean(ss)## [1] 7.261868We see that taking a random projection onto 10 dimensions yields an esimated MSE of 7, which isn’t that different from what we were getting by carefully choosing the projection via PCA.

Exercise 5.3 a. Do Exercise 6.3, parts (a-d) in the Applied Predictive Modeling book using Principle Components Regression with the number of components kept as the tuning parameter.

- Repeat, using JL regression with the reduced dimension size as the tuning parameter. This one will have to be coded up by hand.