Chapter 3 Random Variables

A statistical experiment produces an outcome in a sample space, but frequently we are more interested in a number that summarizes that outcome. For example, if we randomly select a person with a fever and provide them with a dosage of medicine, the sample space might be the set of all people who currently have a fever, or perhaps the set of all possible people who could currently have a fever. However, we are more interested in the summary value of “how much did the temperature of the patient decrease.” This is a random variable.

Definition Let \(S\) be the sample space of an experiment. A random variable is a function from \(S\) to the real line. Random variables are usually denoted by a capital letter.

Suppose \(X\) is a random variable. The events of interest for \(X\) are those that can be defined by a set of real numbers. For example, \(X = 2\) is the event consisting of all outcomes \(s \in S\) with \(X(s) = 2\). Similarly, \(X > 8\) is the event consisting of all outcomes \(s \in S\) with \(X(s) > 8\). In general, if \(U \subset \mathbb{R}\): \[ X \in U \text{ is the event } \{s \in S\ |\ X(s) \in U\} \]

Example Suppose that three coins are tossed. The sample space is \[ S = \{HHH, HHT, HTH, HTT, THH, THT, TTH, TTT\}, \] and all eight outcomes are equally likely, each occurring with probability 1/8. A natural random variable here is the number of heads observed, which we will call \(X\). As a function from \(S\) to the real numbers, \(X\) is given by:

\[ \begin{aligned} X(HHH) &= 3 \\ X(HHT) = X(HTH) = X(THH) &= 2 \\ X(TTH) = X(THT) = X(HTT) &= 1\\ X(TTT) &= 0 \end{aligned} \]

The event \(X = 2\) is the set of outcomes \(\{HHT, HTH, THH\}\) and so: \[ P(X = 2) = P(\{HHT, HTH, THH\}) = \frac{3}{8}. \]

It is often easier, both notationally and for doing computations, to hide the sample space and focus only on the random variable. We will not always explicitly define the sample space of an experiment. It is easier, more intuitive and (for the purposes of this book) equivalent to just understand \(P(a < X < b)\) for all choices of \(a < b\). By understanding these probabilities, we can derive many useful properties of the random variable, and hence, the underlying experiment.

We will consider two types of random variables in this book. Discrete random variables take integer values, and often come from counting something. Continuous random variables take values in an interval of real numbers, and often come from measuring something. Working with discrete random variables requires summation, while continuous random variables require integration. We will discuss these two types of random variable separately in this chapter.

3.1 Discrete random variables

A discrete random variable is a random variable that takes integer values5. A discrete random variable is characterized by its probability mass function (pmf). The pmf \(p\) of a random variable \(X\) is given by \[ p(x) = P(X = x). \]

The pmf may be given in table form or as an equation. Knowing the probability mass function determines the discrete random variable, and we will see that we “understand” the random variable when we know its pmf.

Probability mass functions satisfy the following properties:

Theorem 3.1 Let \(p\) be the probability mass function of \(X\).

- \(p(n) \ge 0\) for all \(n\).

- \(\sum_n p(n) = 1\).

To check that a function is a pmf, we check that all of its values are probabilities, and that those values sum to one.

Example The Eurasian lynx is a wild cat that lives in the north of Europe and Asia. When a female lynx gives birth, she may have from 1 to 4 kittens. Our statistical experiment is a lynx giving birth, and the outcome is a litter of baby lynx. Baby lynx are complicated objects, but there is a simple random variable here: the number of kittens. Call this \(X\). Ecologists have estimated6 the pmf for \(X\) to be: \[ \begin{array}{l|cccc} x & 1 & 2 & 3 & 4 \\ \hline p(x) & 0.18 & 0.51 & 0.27 & 0.04 \end{array} \]

In other words, the probability that a lynx mother has one kitten is 0.18, the probability that she has two kittens is 0.51, and so on. Observe that \(p(1) + p(2) + p(3) + p(4) = 1\), so this is a pmf.

We can use the pmf to calculate the probability of any event defined in terms of \(X\). The probability that a lynx litter has more than one kitten is: \[ P( X > 1 ) = P(X=2) + P(X=3) + P(X=4) = 0.51 + 0.27 + 0.04 = 0.82. \]

We can simulate this random variable without having to capture pregnant lynx. Recall that the R function sample has four arguments: the possible outcomes x, the sample size size to sample, whether we are sampling with replacement replace, and the probability prob associated with the possible outcomes. Here we generate values of \(X\) for 30 lynx litters:
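A call along the following lines would do this. It is a minimal sketch that assumes the pmf table above; because sampling is random, the values differ from run to run and will not match any printed output exactly.

```r
# Simulate 30 lynx litters: outcomes 1..4 with the estimated pmf as weights
X <- sample(1:4, size = 30, replace = TRUE, prob = c(0.18, 0.51, 0.27, 0.04))
X
```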

## [1] 3 3 3 4 2 2 1 3 2 1 2 3 2 2 2 2 2 2 3 3 2 1 3 2 3 2 2 2 2 3

With enough samples of \(X\), we can approximate the probability \(P(X > 1)\) as follows:
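A sketch of such an approximation, assuming a sample size of 10000 (our choice; any large number works) and the lynx pmf from the table above:

```r
X <- sample(1:4, 10000, replace = TRUE, prob = c(0.18, 0.51, 0.27, 0.04))
p_hat <- mean(X > 1)  # proportion of simulated litters with more than one kitten
p_hat
```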

## [1] 0.8245

In this code, the first line simulates the random variable. The code X > 1 produces a vector of TRUE and FALSE which is TRUE when the event \(X > 1\) occurs. Recall that taking the mean of a TRUE/FALSE vector gives the proportion of times that vector is TRUE, which will be approximately \(P(X > 1)\) here.

We can also recreate the pmf by using table to count values and then dividing by the sample size:
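For instance, assuming the same simulated lynx sample of size 10000 (re-created here so the sketch stands alone):

```r
X <- sample(1:4, 10000, replace = TRUE, prob = c(0.18, 0.51, 0.27, 0.04))
pmf_hat <- table(X) / length(X)  # relative frequency of each litter size
pmf_hat
```

The estimated proportions should land close to 0.18, 0.51, 0.27 and 0.04, though not exactly on them.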

## X

## 1 2 3 4

## 0.1755 0.5175 0.2662 0.0408

Example Let \(X\) denote the number of Heads observed when a coin is tossed three times.

In this example, we can simulate the random variable by first simulating experiment outcomes and then calculating \(X\) from those. The following generates three coin flips:
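One way to write this step is sketched here; the name coin_toss is borrowed from the replicate code later in this example, and the flips differ on every run.

```r
# Three independent flips of a fair coin
coin_toss <- sample(c("H", "T"), 3, replace = TRUE)
coin_toss
```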

Now we calculate how many heads were flipped, and produce one value of \(X\).
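A sketch of the counting step, with the three flips regenerated so the snippet runs on its own:

```r
coin_toss <- sample(c("H", "T"), 3, replace = TRUE)  # the experiment outcome
X_one <- sum(coin_toss == "H")  # TRUE counts as 1, so this is the number of heads
X_one
```

Here X_one is a hypothetical name for the single simulated value of \(X\).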

## [1] 2

Finally, we can use replicate to produce many samples of \(X\):

X <- replicate(10000, {

coin_toss <- sample(c("H", "T"), 3, replace = TRUE)

sum(coin_toss == "H")

})

head(X, 30) # see the first 30 values of X

## [1] 3 2 1 2 0 1 3 0 0 2 2 0 0 2 3 3 1 3 1 2 1 2 2 1 3 2 2 0 1 1

From the simulation, we can estimate the pmf using table and dividing by the number of samples:
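A sketch of that estimate, repeating the replicate simulation so the snippet is self-contained:

```r
X <- replicate(10000, {
  coin_toss <- sample(c("H", "T"), 3, replace = TRUE)
  sum(coin_toss == "H")
})
pmf_hat <- table(X) / 10000  # relative frequency of 0, 1, 2, 3 heads
pmf_hat
```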

## X

## 0 1 2 3

## 0.1267 0.3741 0.3699 0.1293

Instead of simulation, we could also calculate the pmf by considering the sample space, which consists of the eight equally likely outcomes HHH, HHT, HTH, HTT, THH, THT, TTH, TTT. Counting heads, we find that \(X\) has the pmf:

\[ \begin{array}{l|cccc} x & 0 & 1 & 2 & 3 \\ \hline p(x) & \frac{1}{8} & \frac{3}{8} & \frac{3}{8} & \frac{1}{8} \end{array} \]

which matches the results of our simulation. Here is an alternate description of \(p\) as a formula: \[ p(x) = {\binom{3}{x}} \left(\frac{1}{2}\right)^3 \qquad x = 0,\ldots,3 \]

We always assume that \(p\) is zero for values not mentioned, both in the table version and in the formula version.

As in the lynx example, we may simulate this random variable directly by sampling with probabilities given by the pmf. Here we sample 30 values of \(X\) without “flipping” any “coins”:
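A minimal sketch, assuming the pmf 1/8, 3/8, 3/8, 1/8 from the table above:

```r
# Sample the number of heads directly from its pmf, with no coin flips
X <- sample(0:3, 30, replace = TRUE, prob = c(1, 3, 3, 1) / 8)
X
```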

## [1] 1 3 1 3 1 1 1 1 1 0 1 2 2 1 1 1 2 1 0 1 1 1 0 1 3 2 0 2 2 1

Example Compute the probability that we observe at least one head when three coins are tossed.

Let \(X\) be the number of heads. We want to compute the probability of the event \(X \ge 1\). Using the pmf for \(X\),

\[\begin{align*} P(1 \le X) &= P(1 \le X \le 3)\\ &=P(X = 1) + P(X = 2) + P(X = 3)\\ &= \frac{3}{8}+ \frac{3}{8} +\frac{1}{8}\\ &= \frac{7}{8} = 0.875. \end{align*}\]

We could also estimate \(P(X \ge 1)\) by simulation:

X <- replicate(10000, {

coin_toss <- sample(c("H", "T"), 3, replace = TRUE)

sum(coin_toss == "H")

})

mean(X >= 1)

## [1] 0.8708

Example Suppose you toss a coin until the first time you see heads. Let \(X\) denote the number of tails that you see. We will see later that the pmf of \(X\) is given by \[ p(x) = \left(\frac{1}{2}\right)^{x + 1} \qquad x = 0, 1, 2, 3, \ldots \] Compute \(P(X = 2)\), \(P(X \le 1)\), \(P(X > 1)\), and the conditional probability \(P(X = 2 | X > 1)\).

- To compute \(P(X = 2)\), we just plug in \(P(X = 2) = p(2) = \left(\frac{1}{2}\right)^3 = \frac{1}{8}\).

- To compute \(P(X \le 1)\) we add \(P(X = 0) + P(X = 1) = p(0) + p(1) = \frac{1}{2} + \frac{1}{4} = \frac{3}{4}\).

- The complement of \(X > 1\) is \(X \le 1\), so \[ P(X > 1) = 1 - P(X \le 1) = 1 - \frac{3}{4} = \frac{1}{4}. \] Alternately, we could compute an infinite sum using the formula for the geometric series: \[ P(X > 1) = p(2) + p(3) + p(4) + \dotsb = \frac{1}{8} + \frac{1}{16} + \frac{1}{32} + \dotsb = \frac{1}{4}. \]

- The formula for conditional probability gives: \[ P(X = 2 | X > 1) = \frac{P(X = 2 \cap X > 1)}{P(X > 1)} = \frac{P(X = 2)}{P(X > 1)} = \frac{1/8}{1/4} = \frac{1}{2}.\] This last answer makes sense because \(X > 1\) requires the first two flips to be tails, and then there is a \(\frac{1}{2}\) chance your third flip will be heads and achieve \(X = 2\).
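The four answers above can be checked numerically. This sketch simply codes the pmf as an R function; the names p, p_eq_2, p_le_1, p_gt_1 and p_cond are ours.

```r
p <- function(x) 0.5^(x + 1)   # pmf of the number of tails before the first head

p_eq_2 <- p(2)                 # P(X = 2)
p_le_1 <- p(0) + p(1)          # P(X <= 1)
p_gt_1 <- 1 - p_le_1           # P(X > 1), by the complement rule
p_cond <- p_eq_2 / p_gt_1      # P(X = 2 | X > 1)
c(p_eq_2, p_le_1, p_gt_1, p_cond)
```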

3.2 Expected value

Suppose you perform a statistical experiment repeatedly, and observe the value of a random variable \(X\) each time. The average of these observations will (under most circumstances) converge to a fixed value as the number of observations becomes large. This value is the expected value of \(X\), written \(E[X]\).

Theorem 3.2 (The Law of Large Numbers) The mean of \(n\) observations of a random variable \(X\) converges to the expected value \(E[X]\) as \(n \to \infty\).

Another word for the expected value of \(X\) is the mean of \(X\).

Example Using simulation, we determine the expected value of a die roll. Here are 30 observations and their average:
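A sketch of how such observations could be generated; the name rolls is ours, and each run gives different rolls.

```r
rolls <- sample(1:6, 30, replace = TRUE)  # 30 rolls of a fair die
rolls
mean(rolls)
```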

## [1] 2 6 1 2 5 4 1 2 1 1 6 5 2 5 6 3 6 4 5 1 5 2 2 3 4 6 5 2 5 6

## [1] 3.6

The mean appears to be somewhere between 3 and 4. Using more trials gives more accuracy:
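For example, with 100000 rolls (the sample size is an assumption on our part; any large value illustrates the point):

```r
rolls <- sample(1:6, 100000, replace = TRUE)
die_mean <- mean(rolls)
die_mean
```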

## [1] 3.49819

Not surprisingly, the mean value is balanced halfway between 1 and 6, at 3.5.

Using the probability distribution of a random variable \(X\), one can compute the expected value \(E[X]\) exactly:

Example Let \(X\) be the value of a six-sided die roll. Since the probability of each outcome is \(\frac{1}{6}\), we have: \[ E[X] = 1\cdot\frac{1}{6} + 2\cdot\frac{1}{6} +3\cdot\frac{1}{6} +4\cdot\frac{1}{6} +5\cdot\frac{1}{6} +6\cdot\frac{1}{6} = \frac{21}{6} = 3.5 \]

Example Let \(X\) be the number of kittens in a Eurasian lynx litter. Then \[ E[X] = 1\cdot p(1) + 2 \cdot p(2) + 3 \cdot p(3) + 4 \cdot p(4) = 0.18 + 2\cdot 0.51 + 3\cdot0.27 + 4\cdot0.04 = 2.17 \] This means that, on average, the Eurasian lynx has 2.17 kittens.

We can perform the computation of \(E[X]\) using R:
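One way to write this computation, using the lynx pmf from earlier in the chapter (the names x, p and EX are ours):

```r
x <- 1:4
p <- c(0.18, 0.51, 0.27, 0.04)
EX <- sum(x * p)  # each value times its probability, summed
EX
```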

## [1] 2.17

Alternatively, we may estimate \(E[X]\) by simulation, using the Law of Large Numbers.
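A sketch of the simulation, assuming 10000 simulated litters:

```r
X <- sample(1:4, 10000, replace = TRUE, prob = c(0.18, 0.51, 0.27, 0.04))
EX_hat <- mean(X)  # sample mean approximates E[X]
EX_hat
```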

## [1] 2.1705

Example Let \(X\) denote the number of heads observed when three coins are tossed. The pmf of \(X\) is given by \(p(x) = {\binom{3}{x}} (1/2)^3\), where \(x = 0,\ldots,3\). The expected value of \(X\) is

\[ E[X] = 0 \cdot \frac{1}{8} + 1 \cdot \frac{3}{8} + 2 \cdot \frac{3}{8} + 3 \cdot \frac{1}{8} = \frac{3}{2} . \]

We can check this with simulation:

X <- replicate(10000, {

coin_toss <- sample(c("H", "T"), 3, replace = TRUE)

sum(coin_toss == "H")

})

mean(X)

## [1] 1.4901

The answer is approximately 1.5, which is what our exact computation of \(E[X]\) predicted.

Example Consider the random variable \(X\) which counts the number of tails observed before the first head when a fair coin is repeatedly tossed. The pmf of \(X\) is \(p(x) = 0.5^{x + 1}\) for \(x = 0, 1, 2, \ldots\). Finding the expected value requires summing an infinite series, which we leave as an exercise. Instead, we proceed by simulation.

We assume (see the next paragraph for a justification) that the infrequent results of \(x \ge 100\) do not impact the expected value much. This assumption needs to be checked, because it is certainly possible that low probability events which correspond to very high values of the random variable could have a large impact on the expected value. Then, we take a sample of size 10000 and follow the same steps as in the previous example.
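A sketch of this truncated simulation. It samples from the values 0 through 99 only, weighting each by \(p(x) = 0.5^{x+1}\); sample rescales the weights internally, so the tiny missing tail mass does not cause an error.

```r
# prob[k] = p(k - 1) = 0.5^k for k = 1, ..., 100
X <- sample(0:99, 10000, replace = TRUE, prob = 0.5^(1:100))
EX_hat <- mean(X)
EX_hat
```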

## [1] 0.997

We estimate that the expected value \(E[X] \approx 1\).

To justify that we do not need to include values of \(x\) bigger than 99, note that

\[\begin{align*} E[X] - \sum_{n = 0}^{99} n .5^{n + 1} &= \sum_{n=100}^\infty n .5^{n + 1} \\ &< \sum_{n=100}^\infty 2^{n/2 + 1} .5^{n + 1}\\ &= \sum_{n = 100}^\infty 2^{-n/2}\\ &= \frac{1}{2^{49}(2 - \sqrt{2})} < 10^{-14} \end{align*}\]

So, truncating the sum at \(n = 99\) introduces a negligible error.

We end this short section with an example of a discrete random variable that has expected value of \(\infty\). Let \(X\) be a random variable such that \(P(X = 2^x) = 2^{-x}\) for \(x = 1,2,\ldots\). Summing each possible value times its probability, we see that \(E[X] = \sum_{x=1}^\infty 2^x P(X = 2^x) = \sum_{x=1}^\infty 2^x 2^{-x} = \sum_{x=1}^\infty 1 = \infty\). If we truncated the sum associated with \(E[X]\) for this random variable at any finite point, then we would introduce a very large error!

3.3 Binomial and geometric random variables

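The binomial and geometric random variables are common and useful models for many real situations. Both involve Bernoulli trials, named after the 17th century Swiss mathematician Jacob Bernoulli. A Bernoulli trial is an experiment that can result in two outcomes, which we call “success” and “failure”. The probability of success is denoted by \(p\).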

Examples

Toss a coin. Arbitrarily define heads to be success. Then \(p = 0.5\).

Shoot a free throw, in basketball. Success would naturally be making the shot, failure missing the shot. Here \(p\) varies depending on who is shooting. An excellent basketball player might have \(p = 0.8\).

Ask a randomly selected voter whether they support a ballot proposition. Here success would be a yes vote, failure a no vote, and \(p\) is likely unknown but of interest to the person doing the polling.

Roll a die, and consider success to be rolling a six. Then \(p = 1/6\).

A Bernoulli process is a sequence (finite or infinite) of repeated, identical, independent Bernoulli trials. Repeatedly tossing a coin is a Bernoulli process. Repeatedly trying to roll a six is a Bernoulli process. Is repeated free throw shooting a Bernoulli process? There are two reasons that it might not be. First, if the person shooting is a beginner, then they would presumably get better with practice. That would mean that the probability of a success is not the same for each trial (especially if the number of trials is large), and the process would not be Bernoulli. Second, there is the more subtle question of whether free throws are independent. Does a free throw shooter get “hot” and become more likely to make the shot following a success? There is no way to know for sure, although research7 suggests that repeated free throws are independent and that modeling free throws of experienced basketball players with a Bernoulli process is reasonable.

This section discusses two discrete random variables coming from a Bernoulli process: the binomial random variable, which counts the number of successes in a fixed number of trials, and the geometric random variable, which counts the number of failures before the first success.

3.3.1 Binomial

A random variable \(X\) is binomial with parameters \(n\) and \(p\), written \(X \sim \text{Binom}(n, p)\), if its pmf is \[ P(X = x) = {\binom{n}{x}} p^x (1-p)^{n-x} \qquad x = 0, \ldots, n. \] The most important example of a binomial random variable comes from counting the number of successes in a Bernoulli process of length \(n\). In other words, if \(X\) counts the number of successes in \(n\) independent and identically distributed Bernoulli trials, each with probability of success \(p\), then \(X \sim \text{Binom}(n, p)\). Indeed, many texts define binomial random variables in this manner, and we will use this alternative definition of a binomial random variable whenever it is convenient for us to do so.

One way to obtain a Bernoulli process is to sample with replacement from a population. If an urn contains 5 white balls and 5 red balls, and you count drawing a red ball as a “success”, then repeatedly drawing balls will be a Bernoulli process as long as you replace the ball back in the urn after you draw it. When sampling from a large population, we can sample without replacement and the resulting count of successes will be approximately binomial.

Example Let \(X\) denote the number of heads observed when three coins are tossed. Then \(X \sim \text{Binom}(3,0.5)\) is a binomial random variable. Here \(n = 3\) because there are three independent Bernoulli trials, and \(p = 0.5\) because each coin has probability \(0.5\) of heads.

In R, the function dbinom provides the pmf of the binomial distribution:

dbinom(x, size = n, prob = p) gives \(P(X = x)\) for \(X \sim \text{Binom}(n,p)\). If we provide a vector of values for x, and a single value of size and prob, then dbinom will compute \(P(X = x)\) for all of the values in x. The idiom sum(dbinom(vec, size, prob)) will be used below, with vec containing distinct values, to compute the probability that \(X\) is in the set described by vec. This is a useful idiom for all of the discrete random variables.

Example For \(X\) the number of heads when three coins are tossed, the pmf is \(P(X = x) = \begin{cases} 1/8 & x = 0,3\\3/8 & x = 1,2 \end{cases}\).

Computing with R,
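a call of the following form evaluates the pmf at all four values at once (a sketch using the dbinom signature described above; the name pmf is ours):

```r
pmf <- dbinom(0:3, size = 3, prob = 0.5)  # P(X = 0), ..., P(X = 3)
pmf
```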

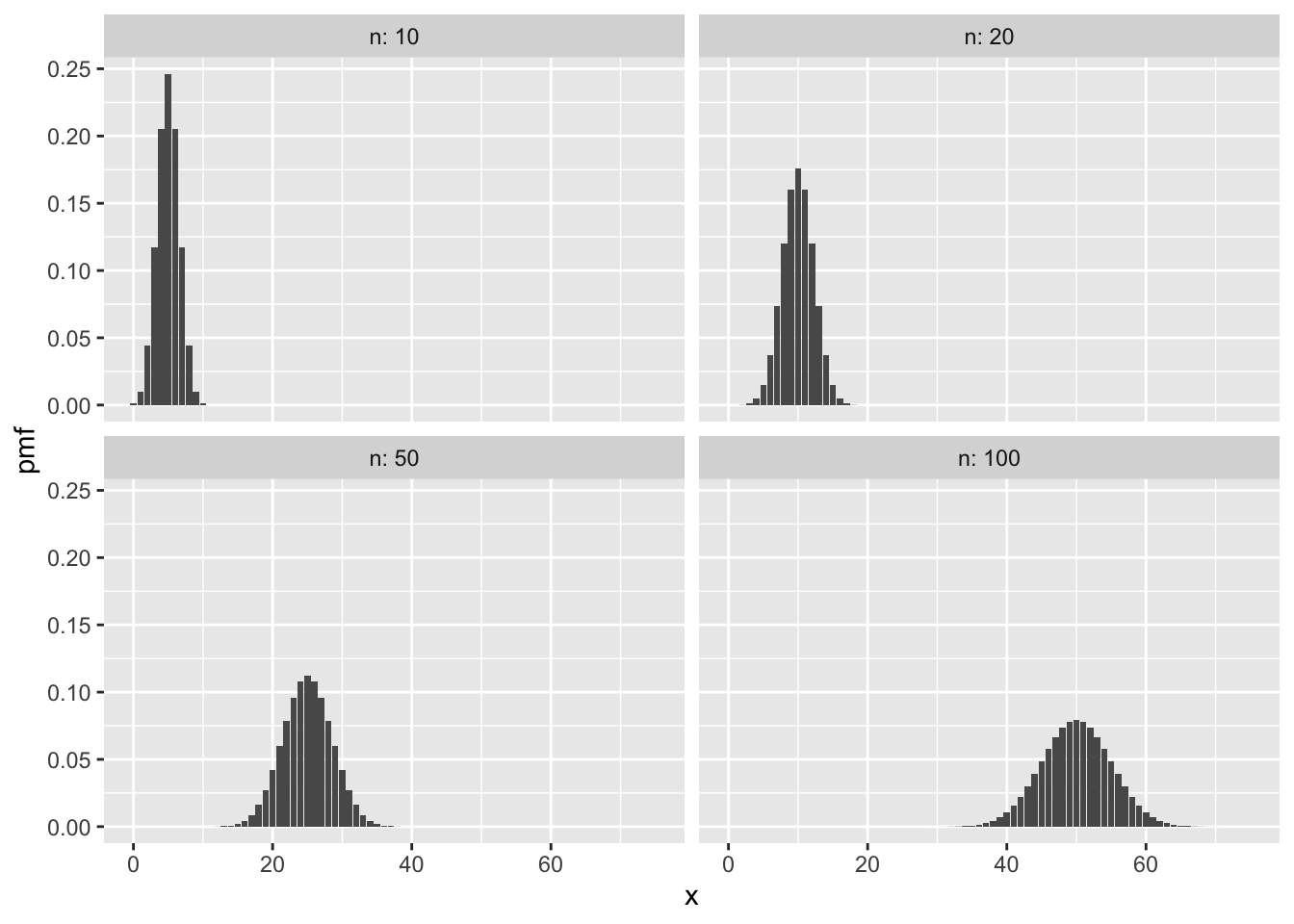

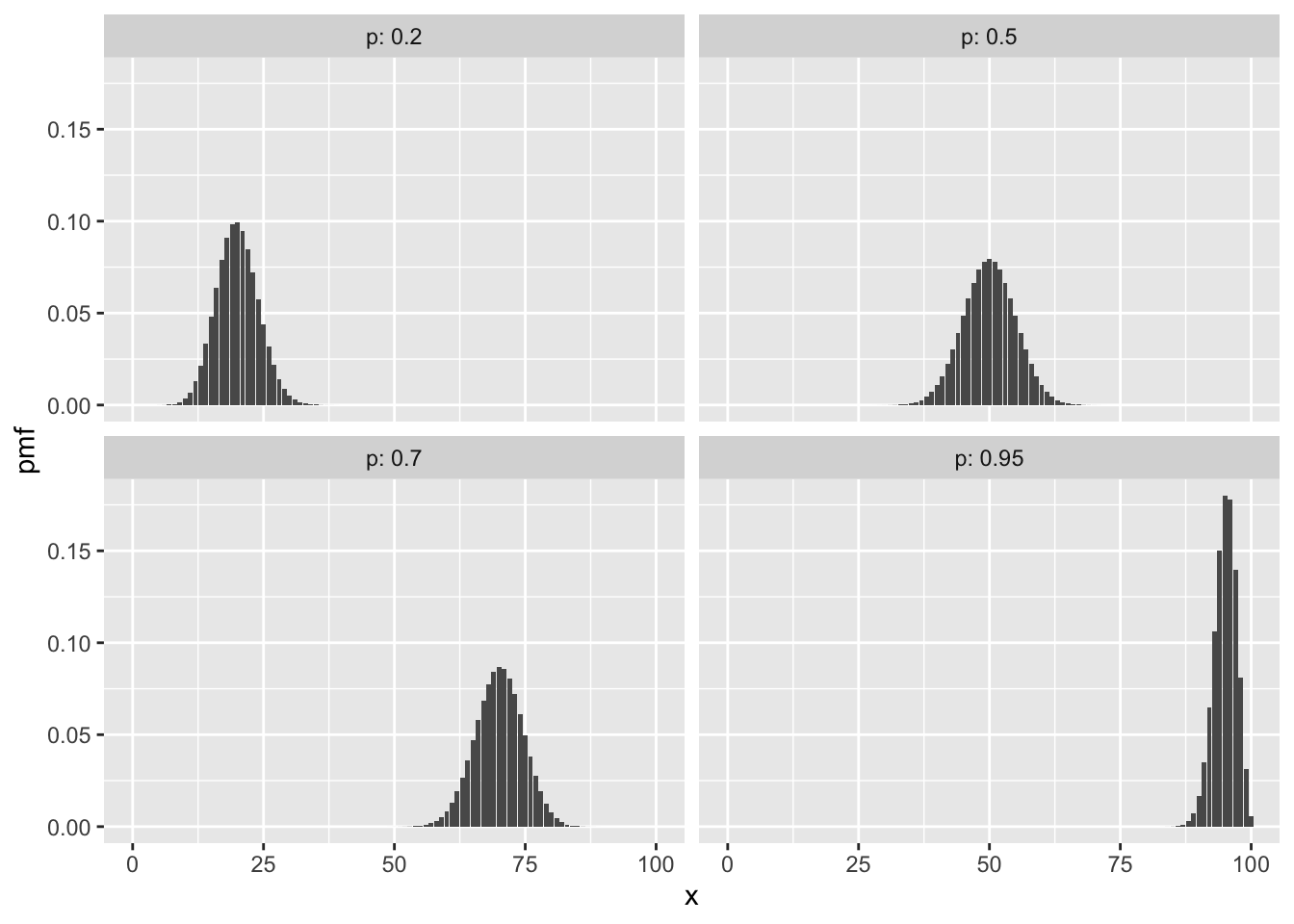

## [1] 0.125 0.375 0.375 0.125

Here are some sample plots of the pmf of a binomial rv for various values of \(n\) and \(p= .5\).

Here are some with \(n = 100\) and various \(p\).

In these plots of binomial pmfs, the distributions are roughly balanced around a peak. The balancing point is the expected value of the random variable, which for binomial rvs is quite intuitive: if \(X \sim \text{Binom}(n,p)\), then \(E[X] = np\).

We will see a simple proof of this once we talk about the expected value of the sum of random variables. Here is a proof using the pmf and the definition of expected value directly.
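One way to carry out the direct computation is sketched below. It assumes the binomial pmf \(P(X = x) = \binom{n}{x}p^x(1-p)^{n-x}\) and the claim \(E[X] = np\), and it uses the identity \(x\binom{n}{x} = n\binom{n-1}{x-1}\), the substitution \(y = x - 1\), and the binomial theorem:

\[\begin{align*} E[X] &= \sum_{x=0}^n x \binom{n}{x} p^x (1-p)^{n-x}\\ &= \sum_{x=1}^n n \binom{n-1}{x-1} p^x (1-p)^{n-x}\\ &= np \sum_{y=0}^{n-1} \binom{n-1}{y} p^y (1-p)^{(n-1)-y}\\ &= np \left(p + (1-p)\right)^{n-1} = np. \end{align*}\]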

Example Suppose 100 dice are thrown. What is the expected number of sixes? What is the probability of observing 10 or fewer sixes?

We assume that the results of the dice are independent and that the probability of rolling a six is \(p = 1/6\). The random variable \(X\) is the number of sixes observed, and \(X \sim \text{Binom}(100,1/6)\).

Then \(E[X] = 100 \cdot \frac{1}{6} \approx 16.67\). That is, we expect 1/6 of the 100 rolls to be a six.

The probability of observing 10 or fewer sixes is \[ P(X \le 10) = \sum_{j=0}^{10} P(X = j) = \sum_{j=0}^{10} {\binom{100}{j}}(1/6)^j(5/6)^{100-j} \approx 0.0427. \] In R,
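the sum(dbinom(vec, size, prob)) idiom described earlier applies directly. A sketch, with p_le_10 as our name for the result:

```r
p_le_10 <- sum(dbinom(0:10, size = 100, prob = 1/6))  # P(X <= 10)
p_le_10
```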

## [1] 0.04269568

R also provides the function pbinom, which is the cumulative sum of the pmf. The cumulative sum of a pmf is important enough that it gets its own name: the cumulative distribution function. We will say more about the cumulative distribution function in general in Section 3.4.

pbinom(x, size = n, prob = p) gives \(P(X \leq x)\) for \(X \sim \text{Binom}(n,p)\).

In the previous example, we could compute \(P(X \le 10)\) as
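A sketch of the pbinom version, which should agree with the sum of dbinom values:

```r
p_le_10 <- pbinom(10, size = 100, prob = 1/6)  # P(X <= 10) in one call
p_le_10
```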

## [1] 0.04269568

Example Suppose Alice and Bob are running for office, and 46% of all voters prefer Alice. A poll randomly selects 300 voters from a large population and asks their preference. What is the expected number of voters who will report a preference for Alice? What is the probability that the poll results suggest Alice will win?

Let “success” be a preference for Alice, and \(X\) be the random variable equal to the number of polled voters who prefer Alice. It is reasonable to assume that \(X \sim \text{Binom}(300,0.46)\) as long as our sample of 300 voters is a small portion of the population of all voters.

We expect that \(0.46 \cdot 300 = 138\) of the 300 voters will report a preference for Alice.

For the poll results to show Alice in the lead, we need \(X > 150\). To compute \(P(X > 150)\), we use \(P(X > 150) = 1 - P(X \leq 150)\) and then
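compute with pbinom. A sketch (p_lead is our name):

```r
p_lead <- 1 - pbinom(150, size = 300, prob = 0.46)  # P(X > 150)
p_lead
```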

## [1] 0.07398045

There is about a 7.4% chance the poll will show Alice in the lead, despite her imminent defeat.

R provides the function rbinom to simulate binomial random variables. The first argument to rbinom is the number of random values to simulate, and the next arguments are size = n and prob = p. Here are 15 simulations of the Alice vs. Bob poll:
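A sketch of such a call; the simulated counts change with every run.

```r
polls <- rbinom(15, size = 300, prob = 0.46)  # 15 polls of 300 voters each
polls
```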

## [1] 132 116 129 139 165 137 138 142 134 140 140 134 134 126 149

In this series of simulated polls, Alice appears to be losing in all except the fifth poll where she was preferred by \(165/300 = 55\%\) of the selected voters.

We can compute \(P(X > 150)\) by simulation
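using, for instance, 10000 simulated polls (the number of simulations is our assumption):

```r
X <- rbinom(10000, size = 300, prob = 0.46)
p_lead_hat <- mean(X > 150)  # share of simulated polls with Alice ahead
p_lead_hat
```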

## [1] 0.0714

which is close to our theoretical result that Alice should appear to be winning 7.4% of the time.

Finally, we can estimate the expected number of people in our poll who say they will vote for Alice and compare that to the value of 138 that we calculated above.
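A sketch, again assuming 10000 simulated polls:

```r
X <- rbinom(10000, size = 300, prob = 0.46)
EX_hat <- mean(X)  # should be near 0.46 * 300 = 138
EX_hat
```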

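## [1] 137.9337

3.3.2 Geometric

A geometric random variable counts the number of failures before the first success in a Bernoulli process. If \(X\) is geometric with probability of success \(p\), we write \(X \sim \text{Geom}(p)\), and its pmf is \[ P(X = x) = p(1-p)^x \qquad x = 0, 1, 2, \ldots \]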

Proof. The only way to achieve \(X = x\) is to have the first \(x\) trials result in failure and the next trial result in success. Each failure happens with probability \(1-p\), and the final success happens with probability \(p\). Since the trials are independent, we multiply \(1-p\) a total of \(x\) times, and then multiply by \(p\).

As a check, we show that the geometric pmf does sum to one. This requires summing an infinite geometric series: \[ \sum_{x=0}^{\infty} p(1-p)^x = p \sum_{x=0}^\infty (1-p)^x = p \frac{1}{1-(1-p)} = 1 \]

The functions dgeom, pgeom and rgeom are available for working with a geometric random variable \(X \sim \text{Geom}(p)\):

- dgeom(x,p) is the pmf, and gives \(P(X = x)\).

- pgeom(x,p) gives \(P(X \leq x)\).

- rgeom(N,p) simulates \(N\) random values of \(X\).

Example A die is tossed until the first 6 occurs. What is the probability that it takes 4 or more tosses?

We define success as a roll of six, and let \(X\) be the number of failures before the first success. Then \(X \sim \text{Geom}(1/6)\), a geometric random variable with probability of success \(1/6\).

Taking 4 or more tosses corresponds to the event \(X \geq 3\). Theoretically,

\[ P(X \ge 3) = \sum_{x=3}^\infty P(X = x) = \sum_{x=3}^\infty \frac{1}{6}\cdot\left(\frac{5}{6}\right)^x = \frac{125}{216} \approx 0.58. \]

We cannot perform the infinite sum with dgeom, but we can come close by summing to a large value of \(x\):
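A sketch, with 1000 as an arbitrary cutoff of our choosing; the neglected tail beyond it is far smaller than machine precision.

```r
p_ge_3 <- sum(dgeom(3:1000, prob = 1/6))  # approximates the sum from 3 to infinity
p_ge_3
```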

## [1] 0.5787037

Another approach is to apply rules of probability to see that \(P(X \ge 3) = 1 - P(X < 3)\). Since \(X\) is discrete, \(X < 3\) and \(X \leq 2\) are the same event. Then \(P(X \ge 3) = 1 - P(X \le 2)\):
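In code, this complement needs only a finite sum. A sketch:

```r
p_ge_3 <- 1 - sum(dgeom(0:2, prob = 1/6))  # 1 - P(X <= 2)
p_ge_3
```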

## [1] 0.5787037

Rather than summing the pmf, we may use pgeom:
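A sketch of the same complement written with the cdf:

```r
p_ge_3 <- 1 - pgeom(2, prob = 1/6)  # pgeom(2, p) is P(X <= 2)
p_ge_3
```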

## [1] 0.5787037

The function pgeom has an option lower.tail=FALSE which makes it compute \(P(X > x)\) rather than \(P(X \le x)\), leading to maybe the most concise method:
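A sketch; note that the first argument stays at 2, because \(P(X > 2)\) and \(P(X \ge 3)\) are the same event for a discrete \(X\).

```r
p_ge_3 <- pgeom(2, prob = 1/6, lower.tail = FALSE)  # P(X > 2)
p_ge_3
```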

## [1] 0.5787037

Finally, we can use simulation to approximate the result:
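A sketch using rgeom, with a sample size that is our assumption:

```r
X <- rgeom(10000, prob = 1/6)   # failures before the first six, 10000 times
p_ge_3_hat <- mean(X >= 3)
p_ge_3_hat
```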

## [1] 0.581

All of these show there is about a 0.58 probability that it will take four or more tosses to roll a six.

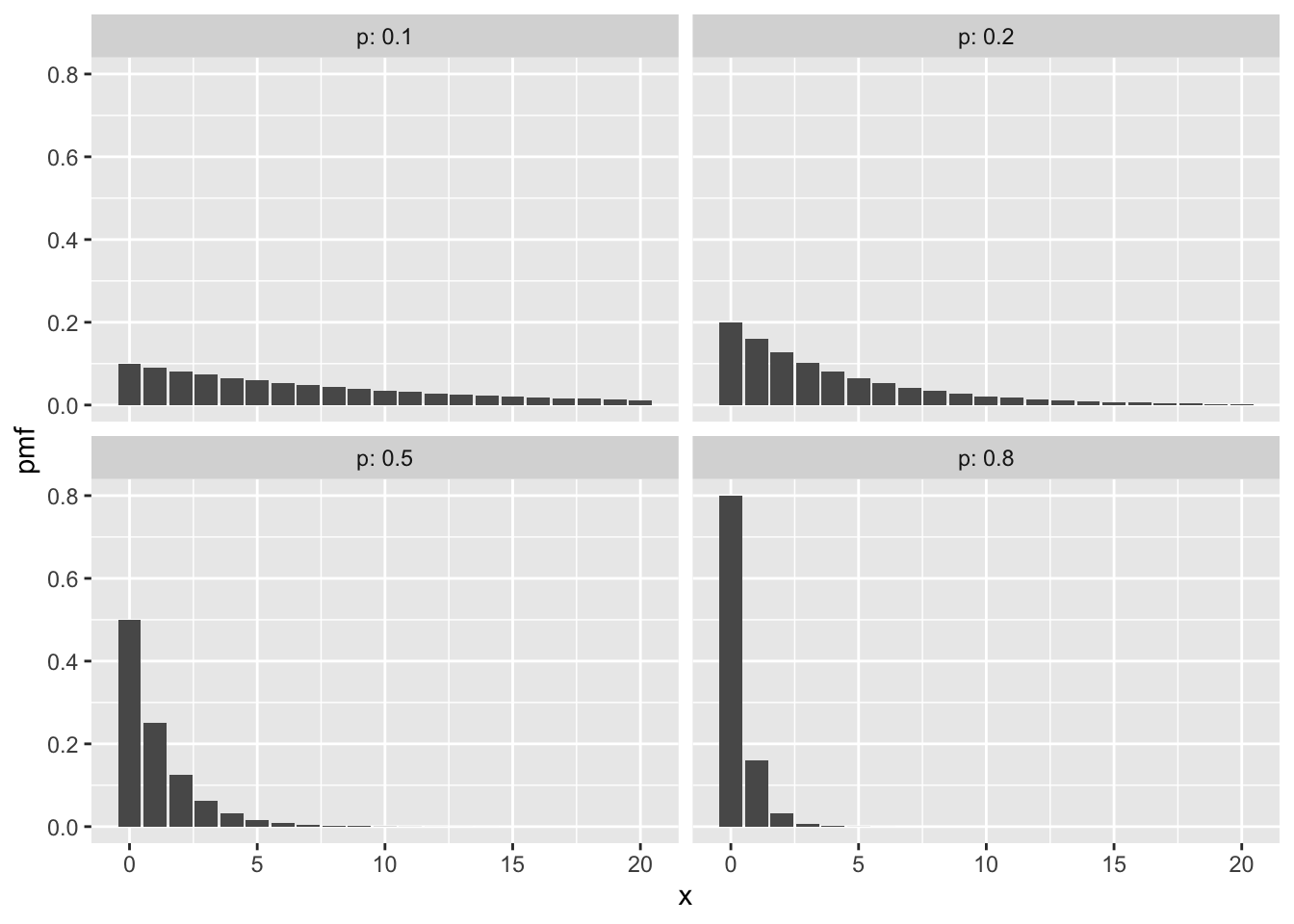

Here are some plots of geometric pmfs with various \(p\).

Observe that for smaller \(p\), we see that \(X\) is likely to be larger. The lower the probability of success, the more failures we expect before our first success.

Example Roll a die until a six is tossed. What is the expected number of rolls?

Let \(X\) be the number of failures before the first six, so \(X \sim \text{Geom}(1/6)\). The expected value of a geometric random variable is \(E[X] = \frac{1-p}{p}\), and so we expect \(\frac{5/6}{1/6} = 5\) failures before the first success. Since the number of total rolls is one more than the number of failures, we expect 6 rolls, on average, to get a six.

Example Professional basketball player Steve Nash was a 90% free throw shooter over his career. If Steve Nash starts shooting free throws, how many would he expect to make before missing one? What is the probability that he could make 20 in a row?

Let \(X\) be the random variable which counts the number of free throws Steve Nash makes before missing one. We model a Steve Nash free throw as a Bernoulli trial, but we choose “success” to be a missed free throw, so that \(p = 0.1\) and \(X \sim \text{Geom}(0.1)\). The expected number of “failures” is \(E[X] = \frac{0.9}{0.1} = 9\), which means we expect Steve to make 9 free throws before missing one.

To make 20 in a row requires \(X \ge 20\). Using \(P(X \ge 20) = 1 - P(X \le 19)\),
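a sketch of the computation is below. The result equals \(0.9^{20}\), the chance that the first 20 shots are all made.

```r
p_20 <- 1 - pgeom(19, prob = 0.1)  # P(X >= 20) = 1 - P(X <= 19)
p_20
```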

## [1] 0.1215767

we see that Steve Nash could run off 20 (or more) free throws in a row about 12% of the times he wants to try.

3.4 Continuous random variables

A probability density function (pdf) is a function \(f\) such that:

- \(f(x) \ge 0\) for all \(x\).

- \(\int f(x)\, dx = 1\).

A continuous random variable \(X\) is a random variable described by a probability density function, in the sense that: \[ P(a \le X \le b) = \int_a^b f(x)\, dx. \] whenever \(a \le b\), including the cases \(a = -\infty\) or \(b = \infty\).

The cumulative distribution function (cdf) associated with \(X\) (either discrete or continuous) is the function \(F(x) = P(X \le x)\), or written out in terms of pdfs and pmfs:

\[ F(x) = P(X \le x) = \begin{cases} \int_{-\infty}^x f(t)\, dt& X \text{ is continuous}\\ \sum_{n = -\infty}^x p(n) & X \text{ is discrete} \end{cases} \]

By the fundamental theorem of calculus, when \(X\) is continuous, \(F\) is a continuous function, hence the name continuous rv. The function \(F\) is sometimes referred to as the distribution function of \(X\).

One major difference between discrete rvs and continuous rvs is that discrete rv’s can take on only countably many different values, while continuous rvs typically take on values in an interval such as \([0,1]\) or \((-\infty, \infty)\).

Theorem 3.7 Let \(X\) be a continuous random variable with pdf \(f\) and cdf \(F\).

- \(\frac{d}{dx} F = f\).

- \(P(a \le X \le b) = F(b) - F(a)\)

- \(P(X \ge a) = 1 - F(a) = \int_a^\infty f(x)\, dx\)

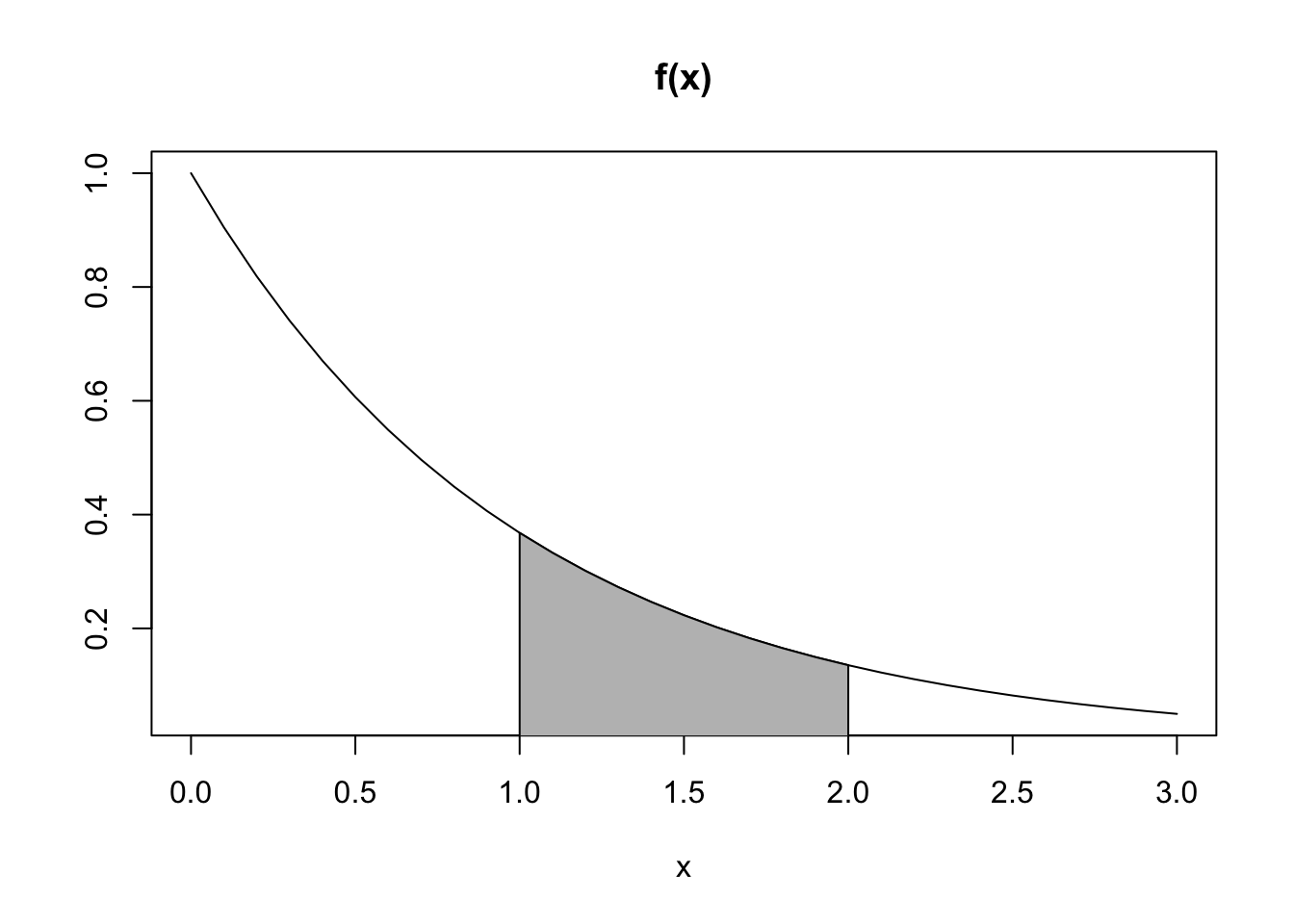

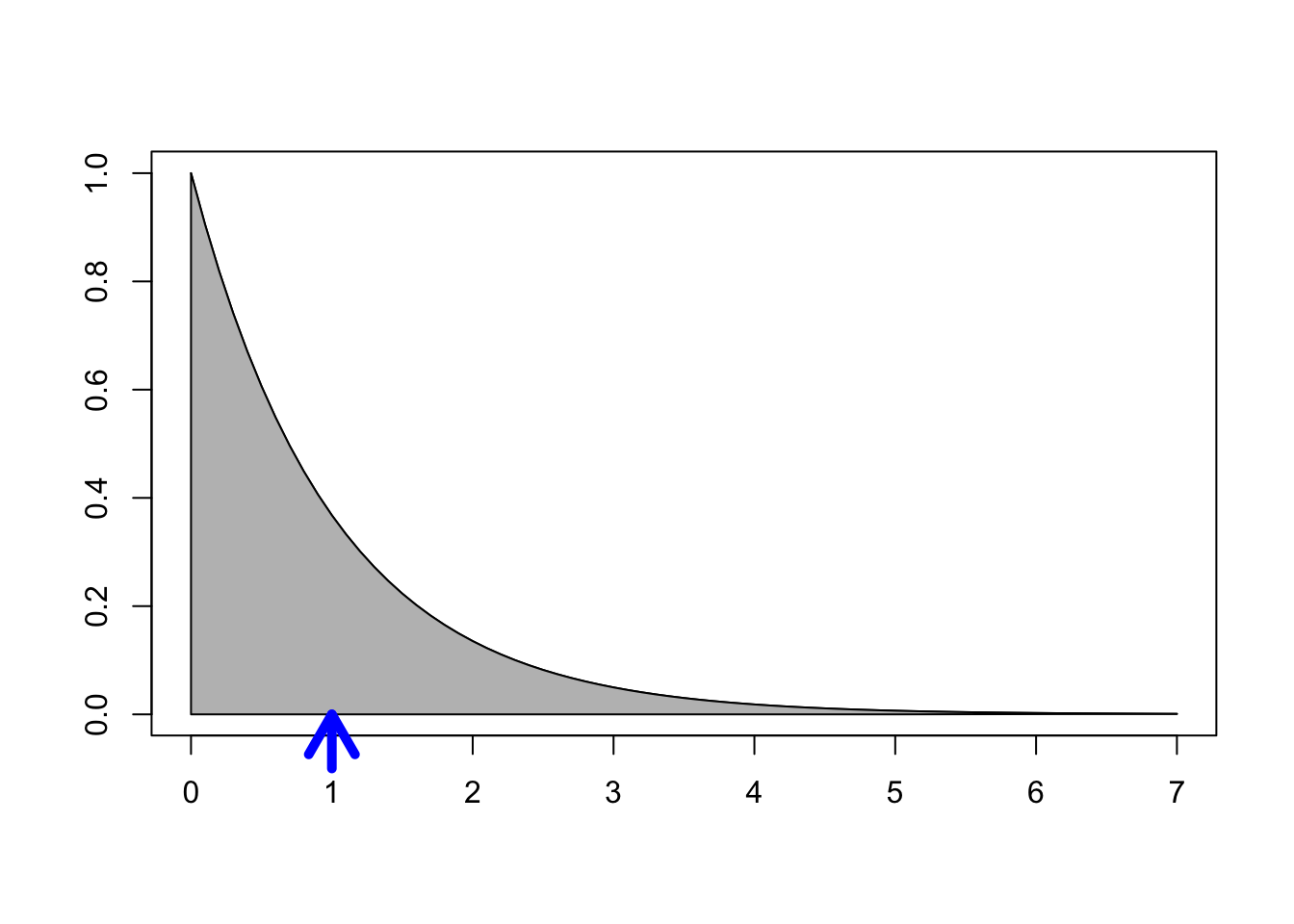

Example Suppose that \(X\) has pdf \(f(x) = e^{-x}\) for \(x > 0\), as graphed below.

Find \(P(1 \le X \le 2)\), which is the shaded area shown in the graph. By definition, \[ P(1\le X \le 2) = \int_1^2 e^{-x}\, dx = -e^{-x}\Bigl|_1^2 = e^{-1} - e^{-2} \approx .233 \]

Find \(P(X \ge 1| X \le 2)\). This is the conditional probability that \(X\) is greater than 1, given that \(X\) is less than or equal to 2. We have \[\begin{align*} P(X \ge 1| X \le 2) &= P( X \ge 1 \cap X \le 2)/P(X \le 2)\\ &=P(1 \le X \le 2)/P(X \le 2)\\ &=\frac{e^{-1} - e^{-2}}{1 - e^{-2}} \approx .269 \end{align*}\]

Example Let \(X\) have the pdf: \[ f(x) = \begin{cases} 0 & x < 0\\ 1 & 0 \le x \le 1\\ 0 & x > 1 \end{cases} \]

\(X\) is called the uniform random variable on the interval \([0,1]\), and realizes the idea of choosing a random number between 0 and 1.

Here are some simple probability computations involving \(X\): \[ \begin{aligned} P(X > 0.3) &= \int_{0.3}^{1}1\,dx = 0.7\\ \\ P(0.2 < X < 0.5) &= \int_{0.2}^{0.5}1\,dx = 0.3 \end{aligned} \]

The cdf for \(X\) is given by: \[ F(x) = \begin{cases} 0 & x < 0\\ x & 0\le x \le 1\\ 1 & x \ge 1 \end{cases} \]

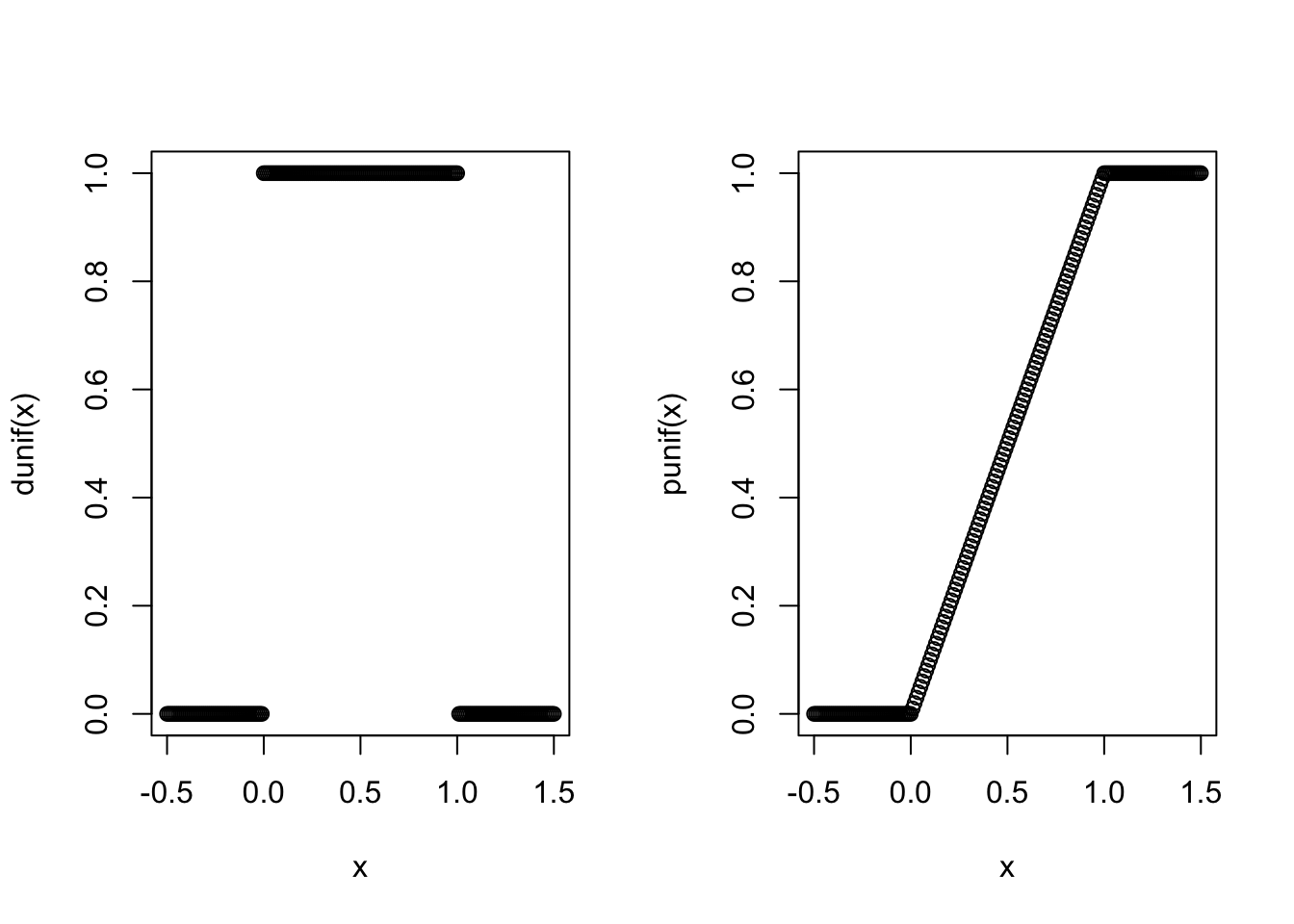

The pdf and cdf of \(X\) are implemented in R with the functions dunif and punif.
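As a small sketch, the two probabilities computed above can be recovered from punif, whose default interval is \([0,1]\) (the names p1 and p2 are ours):

```r
p1 <- 1 - punif(0.3)           # P(X > 0.3)
p2 <- punif(0.5) - punif(0.2)  # P(0.2 < X < 0.5)
c(p1, p2)
dunif(0.4)                     # the pdf equals 1 inside [0, 1]
```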

Here are plots of these functions:

We can produce simulated values of \(X\) with the function runif, and use those to estimate probabilities:
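A sketch of both estimates, assuming 10000 simulated values:

```r
X <- runif(10000)                 # uniform on [0, 1] by default
p1_hat <- mean(X > 0.3)           # estimates P(X > 0.3)
p2_hat <- mean(X > 0.2 & X < 0.5) # estimates P(0.2 < X < 0.5)
c(p1_hat, p2_hat)
```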

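## [1] 0.7041

## [1] 0.3015

Here, we have used the vectorized version of the and operator, &. It is important to distinguish between the operator & and the operator && when doing simulations, or any time that you are doing logical operations on vectors. The operator && is meant for single TRUE/FALSE values. In versions of R before 4.3.0, using && on two longer vectors ignored all of the values in each vector except the first one; from R 4.3.0 onward it is an error. For example, in an older version of R, an expression such as c(TRUE, TRUE, TRUE) && c(TRUE, FALSE, FALSE) gives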

## [1] TRUE

But, compare to
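the vectorized operator. The two example vectors here are our own illustration:

```r
res <- c(TRUE, TRUE, TRUE) & c(TRUE, FALSE, FALSE)  # elementwise "and"
res
```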

## [1] TRUE FALSE FALSE

Example Suppose \(X\) is a random variable with cdf given by

\[ F(x) = \begin{cases} 0 & x < 0\\ x/2 & 0\le x \le 2\\ 1 & x \ge 2 \end{cases} \]

Find \(P(1 \le X \le 2)\). To do this, we note that \(P(1 \le X \le 2) = F(2) - F(1) = 1 - 1/2 = 1/2\).

Find \(P(X \ge 1/2)\). To do this, we note that \(P(X \ge 1/2) = 1 - F(1/2) = 1 - 1/4 = 3/4\).

To find the pdf associated with \(X\), we take the derivative of \(F\). Since pdf’s are only used via integration, it doesn’t matter how we define the pdf at the places where \(F\) is not differentiable. In this case, we set \(f\) equal to \(1/2\) at those places to get \[ f(x) = \begin{cases} 1/2 & 0 \le x \le 2\\ 0& \text{otherwise} \end{cases} \] This example is also a uniform random variable, this time on the interval \([0,2]\)

3.4.1 Expected value of a continuous random variable

Let \(X\) be a continuous random variable with pdf \(f\). The expected value of \(X\) is \[ E[X] = \int_{-\infty}^{\infty} x f(x)\, dx \]

Example Find the expected value of \(X\) when its pdf is given by \(f(x) = e^{-x}\) for \(x > 0\).

We compute \[ E[X] = \int_{-\infty}^{\infty} x f(x)\, dx = \int_0^\infty x e^{-x} \, dx = \left(-xe^{-x} - e^{-x}\right)\Bigr|_0^\infty = 1 \] (Recall: to integrate \(xe^{-x}\) you use integration by parts.)
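As a numerical cross-check of the integration by parts, base R's integrate function can evaluate the same integral. This is a sketch, and the result is accurate only up to the numerical tolerance that integrate reports.

```r
EX <- integrate(function(x) x * exp(-x), lower = 0, upper = Inf)$value
EX  # approximately 1
```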

Example Find the expected value of the uniform random variable on \([0,1]\). Using integration, we get the exact result: \[ E[X] = \int_0^1 x \cdot 1\, dx = \frac{x^2}{2}\Biggr|_0^1 = \frac{1}{2} \]

Approximating with simulation,
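a sketch with 10000 simulated values (our choice of sample size) is:

```r
EX_hat <- mean(runif(10000))  # sample mean of uniform [0, 1] values
EX_hat
```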

## [1] 0.5024951

The balance point is at \(X = 1/2\) for the uniform random variable on [0,1], since the pdf describes a square with base \([0,1]\).

For \(X\) with pdf \(f(x) = e^{-x}, x \ge 0\), the picture below shows \(E[X]=1\) as the balancing point for the shaded region:

3.5 Functions of a random variable

Recall that a random variable \(X\) is a function from the sample space \(S\) to \(\mathbb{R}\). Given a function \(g : \mathbb{R} \to \mathbb{R},\) we can form the random variable \(g \circ X\), usually written \(g(X)\).

For example, if \(X\) is the value from a six-sided die roll and \(g(x) = x^2\), then \(g(X) = X^2\) is the value of a single six-sided die roll, squared. Here \(X^2\) can take the values \(1, 4, 9, 16, 25, 36\), all equally likely.

The distribution of \(g(X)\) can be difficult to compute. However, we can often understand \(g(X)\) by using the pmf or pdf of \(X\) itself. The most important situation is to compute the expected value, \(E[g(X)]\):

\[ E\left[g(X)\right] = \begin{cases} \sum g(x) p(x) & X {\rm \ \ discrete} \\ \int g(x) f(x)\, dx & X {\rm \ \ continuous}\end{cases} \]
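As an illustration of the discrete case, take the die roll \(X\) and \(g(x) = x^2\) from the start of this section. The sketch below computes \(E[X^2]\) exactly from the pmf and then estimates it by simulation; the sample size of 10000 is our assumption, and the simulated value changes from run to run.

```r
x <- 1:6
EX2_exact <- sum(x^2 * (1/6))    # sum of g(x) p(x), equal to 91/6
X <- sample(1:6, 10000, replace = TRUE)
EX2_hat <- mean(X^2)             # simulation estimate of E[X^2]
c(EX2_exact, EX2_hat)
```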

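## [1] 15.0689

Example 3.2 Let \(X \sim \text{Binom}(3, 0.5)\) be the number of heads observed when three coins are tossed, and consider \(E[(X - 1.5)^2]\). Because dbinom gives the pmf for binomial rvs, we can perform this exact computation in R: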
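A sketch of that computation, assuming the quantity of interest is \(E[(X - 1.5)^2]\) for \(X \sim \text{Binom}(3, 0.5)\), which is the value quoted as 0.75 later in the chapter:

```r
x <- 0:3
val <- sum((x - 1.5)^2 * dbinom(x, size = 3, prob = 0.5))  # sum of g(x) p(x)
val
```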

## [1] 0.75

Example Let \(X\) be the uniform random variable on \([0,1]\), and let \(Y = 1/X\).

What is \(P(Y < 3)\)? The event \(Y < 3\) is the same as \(1/X < 3\) or \(X > 1/3\), so \(P(Y < 3) = P(X > 1/3) = 2/3\). We can check with simulation:
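A sketch of the check, assuming 10000 simulated values:

```r
X <- runif(10000)
Y <- 1 / X
p_hat <- mean(Y < 3)  # should be near 2/3
p_hat
```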

## [1] 0.6619

On the other hand, the expected value of \(Y = 1/X\) is not well behaved. We compute: \[ E[1/X] = \int_0^1 \frac{1}{x} dx = \ln(x)\Bigr|_0^1 = \infty \] Small values of \(X\) are common enough that the huge values of \(1/X\) produced cause the expected value to be infinite. Let’s see what this does to simulations:
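A sketch with three increasing sample sizes. The particular sizes are our assumption, and because the results are so unstable, no run should be expected to reproduce any printed values.

```r
m1 <- mean(1 / runif(100))
m2 <- mean(1 / runif(10000))
m3 <- mean(1 / runif(1000000))
c(m1, m2, m3)  # sample means do not settle down as n grows
```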

## [1] 4.116572

## [1] 10.65163

## [1] 22.27425

Because the expected value is infinite, the simulations are not approaching a finite number as the size of the simulation increases. The reader is encouraged to try running these simulations multiple times to observe the inconsistency of the results.

Example 3.3 Compute \(E[X^2]\) for \(X\) that has pdf \(f(x) = e^{-x}\), \(x > 0\).

Using integration by parts: \[ E[X^2] = \int_0^\infty x^2 e^{-x}\, dx = \left(-x^2 e^{-x} - 2x e^{-x} - 2 e^{-x}\right)\Bigl|_0^\infty = 2. \]

We conclude this section with two simple but important observations about expected values. First, that expected value is linear. Second, that the expected value of a constant is that constant. Stated precisely:

Theorem 3.8 For random variables \(X\) and \(Y\), and constants \(a\), \(b\), and \(c\):

- \(E[aX + bY] = aE[X] + bE[Y]\)

- \(E[c] = c\)

The proofs follow from the definition of expected value and the linearity of integration and summation, and are left as exercises for the reader. With these theorems in hand, though, we can provide a much simpler proof for the formula of the expected value of a binomial random variable.

Let \(X \sim \text{Binom}(n, p)\). Then \(X\) is the number of successes in \(n\) Bernoulli trials, each with probability \(p\). Let \(X_1, \ldots, X_n\) be independent Bernoulli random variables with probability of success \(p\). That is, \(P(X_i = 1) = p\) and \(P(X_i = 0) = 1 - p\). It follows that \(X = \sum_{i = 1}^n X_i\). Therefore,

\[\begin{align*} E[X] &= E[\sum_{i = 1}^n X_i]\\ &= \sum_{i = 1}^n E[X_i] = \sum_{i = 1}^n p = np. \end{align*}\]

3.6 Variance and standard deviation

The variance of a random variable measures the spread of the variable around its expected value. Rvs with large variance can be quite far from their expected values, while rvs with small variance stay near their expected value. The standard deviation is simply the square root of the variance. The standard deviation also measures spread, but in more natural units which match the units of the random variable itself.

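Definition Let \(X\) be a random variable with mean \(\mu = E[X]\). The variance of \(X\) is \(\text{Var}(X) = E[(X - \mu)^2]\), and the standard deviation is \(\sigma(X) = \sqrt{\text{Var}(X)}\).

Note that the variance of an rv is always nonnegative (positive in the French sense), as it is the integral or sum of a nonnegative function.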

The next theorem gives a formula for the variance that is often easier than the definition when performing computations.

Theorem 3.9 \(\text{Var}(X) = E[X^2] - E[X]^2\)

Example Let \(X \sim \text{Binom}(3,0.5)\). Here \(\mu = E[X] = 1.5\).

In Example 3.2, we saw that \(E[(X-1.5)^2] = 0.75\). Then \(\text{Var}(X) = 0.75\) and the standard deviation is \(\sigma(X) = \sqrt{0.75} \approx 0.866\). We can check both of these using simulation and the built-in R functions var and sd:
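A minimal sketch of such a check, assuming 10000 simulated values (the sample size is an illustrative choice), might be:

```r
# Simulate Binom(3, 0.5) and estimate the variance and standard deviation
X <- rbinom(10000, 3, 0.5)
var(X)   # should be near 0.75
sd(X)    # should be near sqrt(0.75), about 0.866
```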

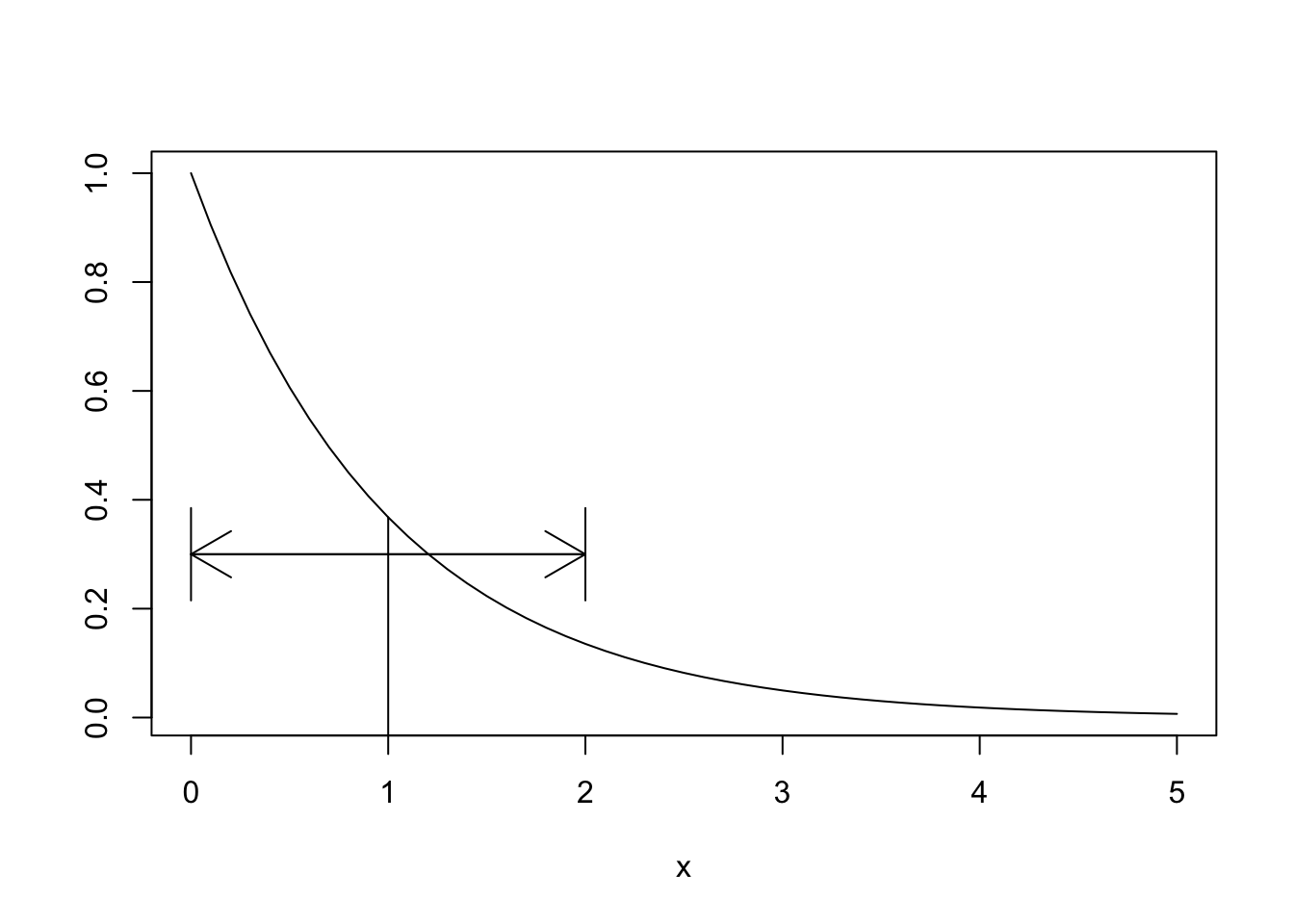

## [1] 0.7570209
## [1] 0.8700695

Example Compute the variance of \(X\) if the pdf of \(X\) is given by \(f(x) = e^{-x}\), \(x > 0\).

We have already seen that \(E[X] = 1\) and \(E[X^2] = 2\) (Example 3.3). Therefore, the variance of \(X\) is \[ \text{Var}(X) = E[X^2] - E[X]^2 = 2 - 1 = 1. \] The standard deviation is \(\sigma(X) = \sqrt{1} = 1\). We interpret the standard deviation \(\sigma\) as a spread around the mean, as shown in this picture:

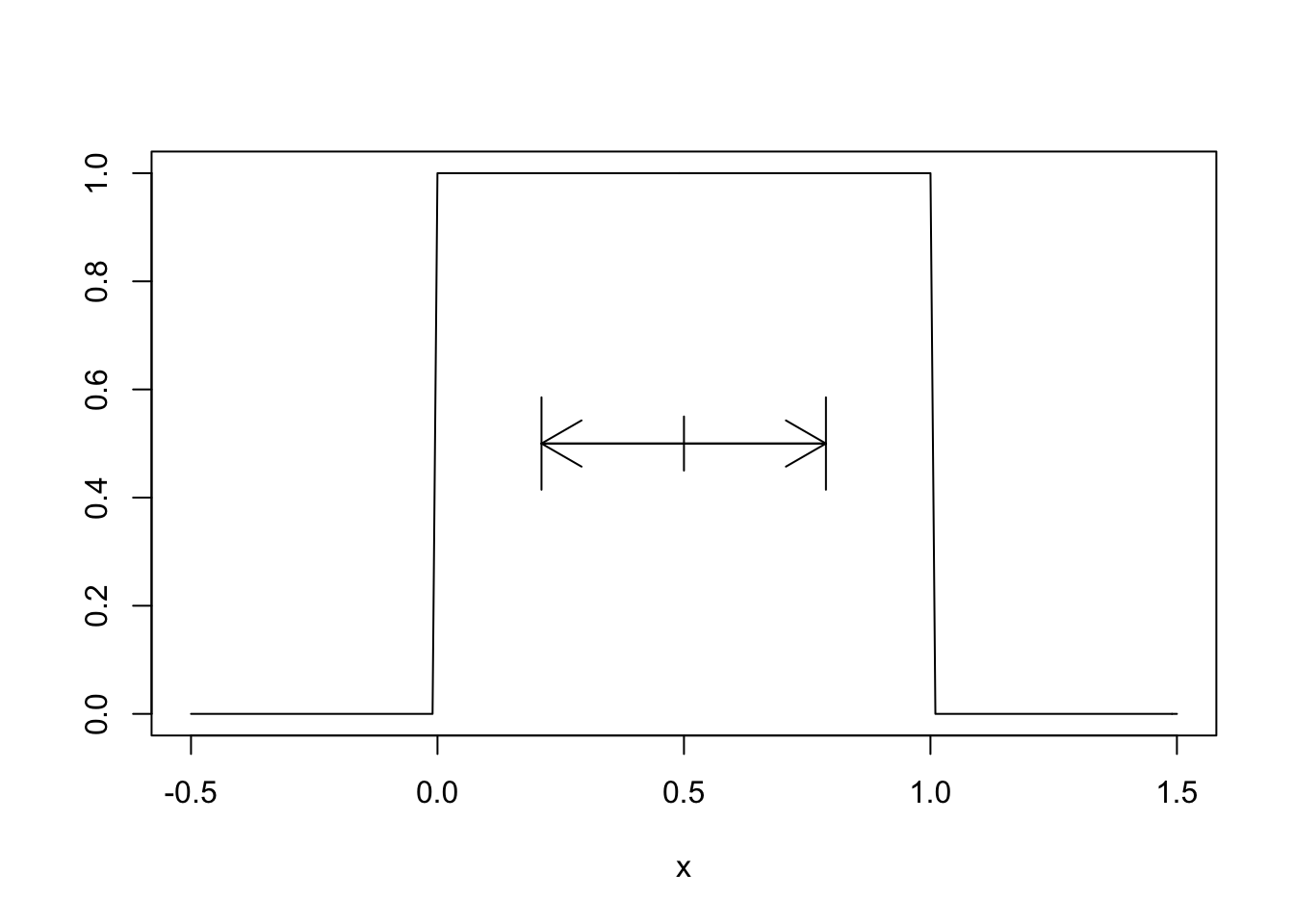

Example Compute the standard deviation of the uniform random variable \(X\) on \([0,1]\). \[ \begin{split} \text{Var}(X) &= E[X^2] - E[X]^2 = \int_0^1x^2 \cdot 1\, dx - \left(\frac{1}{2}\right)^2\\ &= \frac{1}{3} - \frac{1}{4} = \frac{1}{12} \approx 0.083. \end{split} \] So the standard deviation is \(\sigma(X) = \sqrt{1/12} \approx 0.289\). Shown as a spread around the mean of 1/2:

For many distributions, most of the values will lie within one standard deviation of the mean, i.e. within the spread shown in the example pictures. Almost all of the values will lie within 2 standard deviations of the mean. What do we mean by “almost all”? Well, 85% would be almost all. 15% would not be almost all. It is a very vague rule of thumb. If you want something more precise, see Chebychev’s Theorem, which says in particular that the probability of being more than 2 standard deviations away from the mean is at most 25%.

Sometimes, you know that the data you collect will likely fall in a certain range of values. For example, if you are measuring the height in inches of 100 randomly selected adult males, you would be able to guess that your data will very likely lie in the interval 60-84. You can get a rough estimate of the standard deviation by taking the expected range of values and dividing by 6; in this case it would be 24/6 = 4. Here, we are using the heuristic that it is very rare for data to fall more than three standard deviations from the mean. This can be useful as a quick check on your computations.

Unlike expected value, variance and standard deviation are not linear. However, variance and standard deviation do have scaling properties, and variance does distribute over sums in the special case of independent random variables:

Theorem 3.10

1. Let \(X\) be a rv and \(c\) a constant. Then \[ \begin{aligned} \text{Var}(cX) &= c^2\text{Var}(X)\\ \sigma(cX) &= |c| \sigma(X) \end{aligned} \]

2. Let \(X\) and \(Y\) be independent random variables. Then \[ {\rm Var}(X + Y) = {\rm Var}(X) + {\rm Var}(Y) \]

Proof. We prove part 1 here, and postpone the proof of part 2. \[\begin{align*} {\rm Var}(cX) &= E[(cX)^2] - E[cX]^2 = c^2E[X^2] - (cE[X])^2\\ &= c^2\bigl(E[X^2] - E[X]^2\bigr) = c^2{\rm Var}(X) \end{align*}\] Taking square roots gives \(\sigma(cX) = |c|\sigma(X)\).

Example Computing the variance of a binomial random variable. Let \(X \sim \text{Binom}(n, p)\). We have seen that \(X = \sum_{i = 1}^n X_i\), where \(X_i\) are iid Bernoulli random variables. Therefore,

\[\begin{align*} {\text {Var}}(X) &= {\text {Var}}(\sum_{i = 1}^n X_i)\\ &= \sum_{i = 1}^n {\text {Var}}(X_i) \\ &= \sum_{i = 1}^n p(1 - p) = np(1-p) \end{align*}\] where we have used that the variance of a Bernoulli random variable is \(p(1- p)\). Indeed, \(E[X_i^2] -E[X_i]^2 = p - p^2 = p(1 - p)\).
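The formulas \(E[X] = np\) and \(\text{Var}(X) = np(1-p)\) can also be checked numerically from the pmf. The sketch below uses the illustrative values \(n = 10\) and \(p = 0.3\), which are assumptions and not taken from the text.

```r
# Exact mean and variance of Binom(n, p) computed directly from the pmf
n <- 10
p <- 0.3                      # illustrative parameter values
x <- 0:n
pmf <- dbinom(x, n, p)
EX  <- sum(x * pmf)           # E[X]
EX2 <- sum(x^2 * pmf)         # E[X^2]
c(mean = EX, np = n * p, variance = EX2 - EX^2, npq = n * p * (1 - p))
```

The first two entries agree with each other, as do the last two, up to floating point error.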

3.7 Normal random variables

The normal distribution is the most important in statistics. It is often referred to as the bell curve, because its shape resembles a bell:

The importance of the normal distribution stems from the Central Limit Theorem, which (very loosely) states that many random variables have normal distributions. A little more accurately, the Central Limit Theorem says that random variables which are affected by many small independent factors are approximately normal.

For example, we might model the heights of adult females with a normal distribution. We imagine that adult height is affected by genetic contributions from generations of parents together with the sum of contributions from food eaten and other environmental factors. This is a reason to try a normal model for heights of adult females, and certainly should not be seen as a theoretical justification of any sort that adult female heights must be normal.

Many measured quantities are commonly modeled with normal distributions. Biometric measurements (height, weight, blood pressure, wingspan) are often nearly normal. Standardized test scores, economic indicators, scientific measurement errors, and variation in manufacturing processes are other examples.

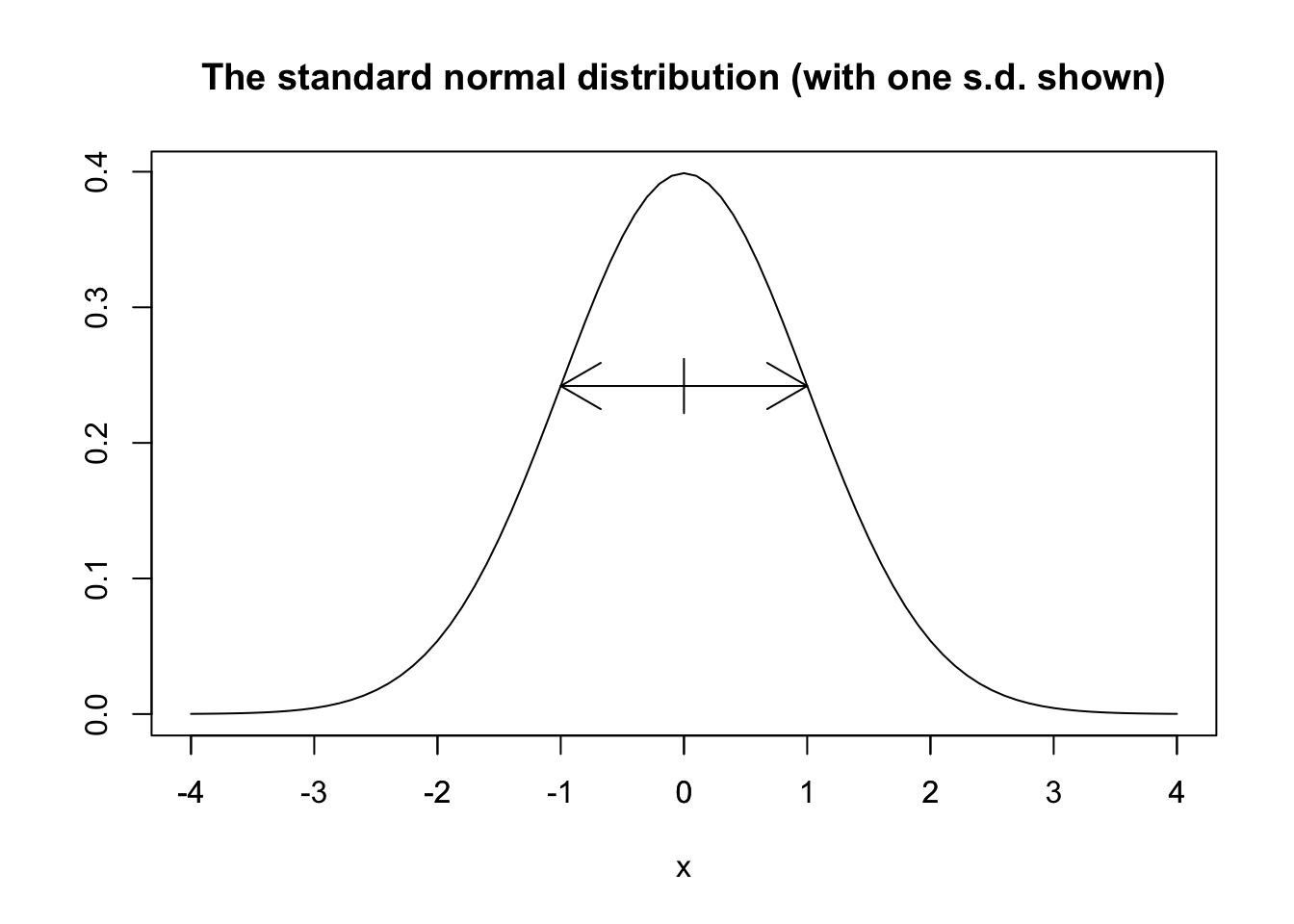

The mathematical definition of the normal distribution begins with the function \(h(x) = e^{-x^2}\), which produces the bell shaped curve shown above, centered at zero and with tails that decay very quickly to zero. By itself, \(h(x) = e^{-x^2}\) is not a distribution since it does not have area 1 underneath the curve. In fact:

\[ \int_{-\infty}^\infty e^{-x^2} dx = \sqrt{\pi} \]

This famous result is known as the Gaussian integral. Its proof is left to the reader in Exercise 38. By rescaling we arrive at an actual pdf given by \(g(x) = \frac{1}{\sqrt{\pi}}e^{-x^2}\). The distribution \(g(x)\) has mean zero and standard deviation \(\frac{1}{\sqrt{2}} \approx 0.707\). The inflection points of \(g(x)\) are also at \(\pm \frac{1}{\sqrt{2}}\), and so rescaling by a factor of \(\sqrt{2}\) in the \(x\) direction produces a pdf with standard deviation 1 and inflection points at \(\pm 1\): \[ f(x) = \frac{1}{\sqrt{2\pi}} e^{-x^2/2} \] A random variable \(Z\) with this pdf is called a standard normal random variable.

The R function pnorm computes the cdf of the normal distribution, as pnorm(x) \(= P(Z \leq x)\). Using this, we can compute the probability that \(Z\) lies within 1, 2, and 3 standard deviations of its mean:

- \(P(-1 \leq Z \leq 1) = P(Z \leq 1) - P(Z \leq -1) =\) pnorm(1) - pnorm(-1) = 0.6826895.

- \(P(-2 \leq Z \leq 2) = P(Z \leq 2) - P(Z \leq -2) =\) pnorm(2) - pnorm(-2) = 0.9544997.

- \(P(-3 \leq Z \leq 3) = P(Z \leq 3) - P(Z \leq -3) =\) pnorm(3) - pnorm(-3) = 0.9973002.

By shifting and rescaling \(Z\), we define the normal random variable with mean \(\mu\) and standard deviation \(\sigma\) as \(X = \sigma Z + \mu\), and we write \(X \sim \text{Normal}(\mu, \sigma)\).

The parameter names are the mean \(\mu\) and the standard deviation \(\sigma\).

For any normal random variable, approximately 68% of the normal distribution lies within 1 standard deviation of the mean, 95% lies within two standard deviations of the mean, and 99.7% lies within three standard deviations of the mean.

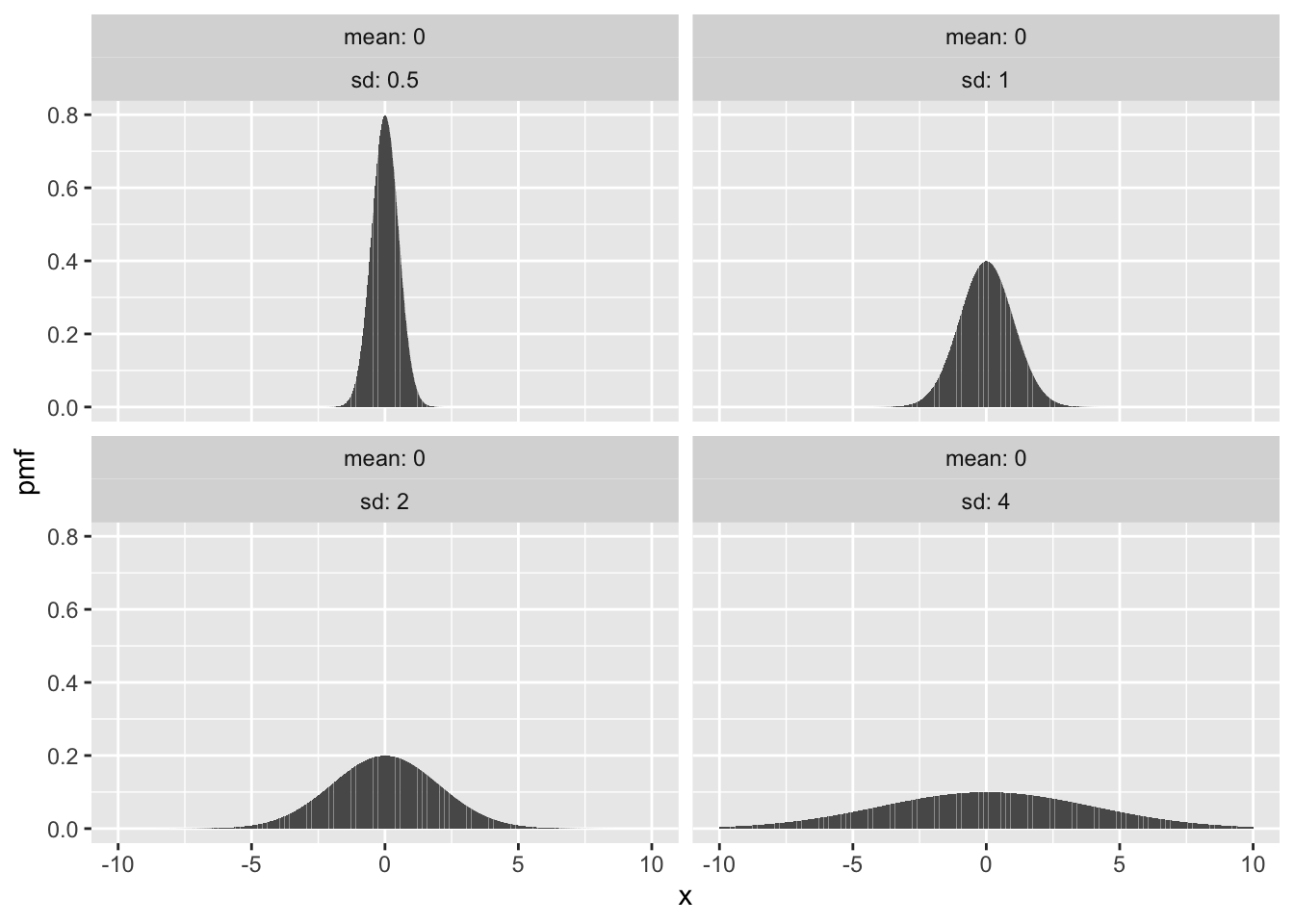

Here are some examples of normal distributions with fixed mean \(\mu = 0\) and various values of the standard deviation \(\sigma\):

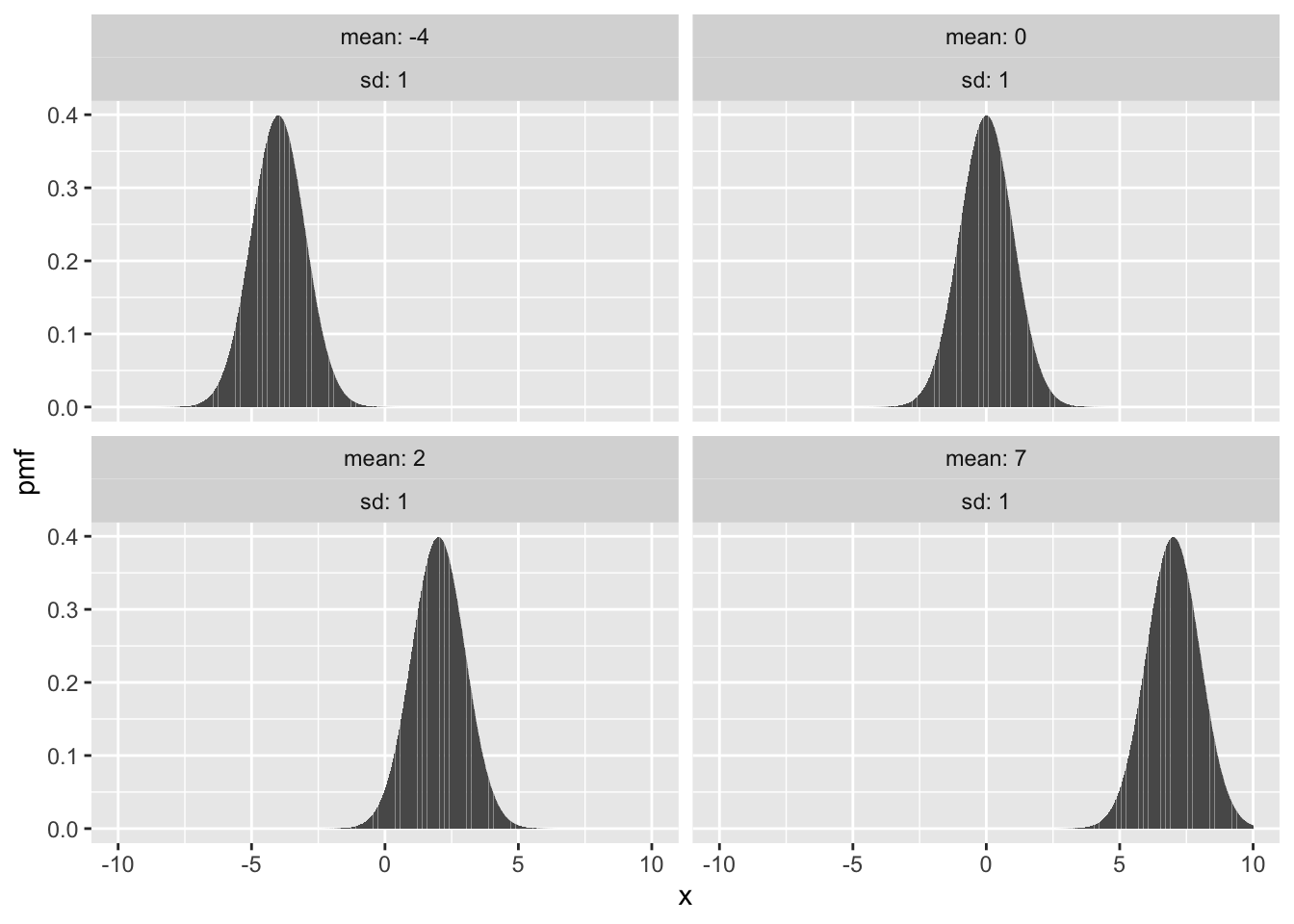

Here are normal distributions with fixed standard deviation \(\sigma = 1\) and various means \(\mu\):

3.7.1 Computations with normal random variables

R has built-in functions for working with normal distributions and normal random variables. The root name for these functions is norm, and as with other distributions the prefixes d, p, q, and r specify the pdf, cdf, inverse cdf, or random sampling. If \(X \sim \text{Normal}(\mu,\sigma)\):

- dnorm(x,mu,sigma) gives the height of the pdf at \(x\).

- pnorm(x,mu,sigma) gives \(P(X \leq x)\), the cdf.

- qnorm(p,mu,sigma) gives the value of \(x\) so that \(P(X \leq x) = p\), which is the inverse cdf.

- rnorm(N,mu,sigma) simulates \(N\) random values of \(X\).

Here are some simple examples:

Example Let \(X \sim {\rm Normal}(\mu = 3, \sigma = 2)\). Find \(P(X \le 4)\) and \(P(0 \le X \le 5)\).
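Both probabilities come from the cdf. A sketch of calls that should reproduce the values below, using the pnorm argument order (value, mean, sd) described above:

```r
# P(X <= 4) for X ~ Normal(3, 2)
pnorm(4, mean = 3, sd = 2)
# P(0 <= X <= 5) as a difference of two cdf values
pnorm(5, mean = 3, sd = 2) - pnorm(0, mean = 3, sd = 2)
```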

## [1] 0.6914625
## [1] 0.7745375

Example Let \(X \sim {\rm Normal}(100,30)\). Find the value of \(q\) such that \(P(X \le q) = 0.75\). One approach is to try various choices of \(q\) until discovering that pnorm(120,100,30) is close to 0.75. However, the purpose of the qnorm function is to answer this exact question:
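A sketch of the call, with the probability first and then the mean and standard deviation (the variable name q is illustrative):

```r
# 75th percentile of Normal(100, 30)
q <- qnorm(0.75, mean = 100, sd = 30)
q
pnorm(q, 100, 30)   # sanity check: feeding q back into the cdf recovers 0.75
```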

## [1] 120.2347

Example The length of dog pregnancies from conception to birth varies according to a distribution that is approximately normal with mean 63 days and standard deviation 2 days.

- What percentage of dog pregnancies last 60 days or less?

- What percentage of dog pregnancies last 67 days or more?

- What range covers the shortest 90% of dog pregnancies?

- What is the narrowest range of times that covers 90% of dog pregnancies?

We let \(X\) be the random variable which is the length of a dog pregnancy. We model \(X \sim \text{Normal}(63,2)\). Then parts a and b ask for \(P(X \leq 60)\) and \(P(X \geq 67)\) and we can compute these with pnorm as follows:
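A sketch of the two calls (the variable names are illustrative); the upper tail in part b is taken as one minus the cdf:

```r
# Part a: P(X <= 60) for X ~ Normal(63, 2)
p_short <- pnorm(60, mean = 63, sd = 2)
# Part b: P(X >= 67) = 1 - P(X <= 67)
p_long <- 1 - pnorm(67, mean = 63, sd = 2)
p_short
p_long
```

An equivalent way to get the upper tail is pnorm(67, 63, 2, lower.tail = FALSE).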

## [1] 0.0668072
## [1] 0.02275013

For part c, we want \(x\) so that \(P(X \leq x) = 0.90\). This is qnorm(0.90,63,2)=65.6, so 90% of dog pregnancies are shorter than 65.6 days.

For part d, we need to use the fact that the pdfs of normal random variables are symmetric about their mean, and decreasing away from the mean. So, if we want the shortest interval that contains 90% of dog pregnancies, it should be centered at the mean with 5% of pregnancies to the left of the interval, and 5% of the pregnancies to the right of the interval. We get an interval of the form [qnorm(0.05, 63, 2), qnorm(0.95, 63, 2)], or approximately [59.7, 66.3]. The length of this interval is 6.6, which is much smaller than the length of the interval computed in part c, which was 65.6.
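The quantiles for parts c and d can be computed together. This is a sketch, and the variable names are illustrative:

```r
# Part c: upper end of the shortest 90% of pregnancies
upper_c <- qnorm(0.90, 63, 2)
# Part d: central interval with 5% cut off in each tail
interval_d <- qnorm(c(0.05, 0.95), 63, 2)
upper_c
interval_d
diff(interval_d)   # length of the central interval
```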

Example Let \(Z\) be a standard normal random variable. Find the mean and standard deviation of the variable \(e^Z\).

We solve this with simulation:
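A sketch of such a simulation, assuming the 100000 simulated values mentioned in the discussion of the results:

```r
# Simulate Z ~ Normal(0, 1) and summarize exp(Z)
Z <- rnorm(100000)
mean(exp(Z))
sd(exp(Z))
```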

## [1] 1.645643
## [1] 2.139868

The mean of \(e^Z\) is approximately 1.6, and the standard deviation is approximately 2.1. Note that even with 100000 simulated values these answers are not particularly accurate because on rare occasions \(e^Z\) takes on very large values.

Example Suppose you are picking seven women at random from a university to form a starting line-up in an ultimate frisbee game. Assume that the women’s heights at this university are normally distributed with mean 64.5 inches (5 foot, 4.5 inches) and standard deviation 2.25 inches. What is the probability that 3 or more of the women are 68 inches (5 foot, 8 inches) or taller?

To do this, we first determine the probability that a single randomly selected woman is 68 inches or taller. Let \(X\) be a normal random variable with mean 64.5 and standard deviation 2.25. We compute \(P(X \ge 68)\) using pnorm:

## [1] 0.09121122

Now, we need to compute the probability that 3 or more of the 7 women are 68 inches or taller. Since the population of all women at a university is much larger than 7, the number of women in the starting line-up who are 68 inches or taller is binomial with \(n = 7\) and \(p = 0.09121122\), which we computed in the previous step. We compute the probability that at least 3 are 68 inches or taller as
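A sketch of this step, taking the single-woman probability 0.09121122 from the text as given (the name p_tall is illustrative):

```r
p_tall <- 0.09121122                         # probability from the previous step
# P(at least 3 of 7) = 1 - P(at most 2 of 7)
p_three_plus <- 1 - pbinom(2, size = 7, prob = p_tall)
p_three_plus
sum(dbinom(3:7, size = 7, prob = p_tall))    # same value, summing the pmf
```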

## [1] 0.02004754

So, there is about a 2 percent chance that at least three will be 68 inches or taller. Looking at the 2019 national champion UC San Diego Psychos roster, none of the players listed are 68 inches or taller, which has about a 50-50 chance of occurring according to our model.

Example Throwing a dart at a dartboard with the bullseye at the origin, model the location of the dart with independent coordinates \(X \sim \text{Normal}(0,3)\) and \(Y \sim \text{Normal}(0,3)\) (both in inches). What is the expected distance from the bullseye?

The distance from the bullseye is given by the Euclidean distance formula \(d = \sqrt{X^2+ Y^2}\). We simulate the \(X\) and \(Y\) random variables and then compute the mean of \(d\):
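A sketch of the simulation, assuming 10000 simulated throws (the number of throws is an illustrative choice):

```r
# Independent normal coordinates with mean 0 and standard deviation 3 inches
X <- rnorm(10000, 0, 3)
Y <- rnorm(10000, 0, 3)
d <- sqrt(X^2 + Y^2)   # Euclidean distance of each throw from the bullseye
mean(d)
```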

## [1] 3.78152

We expect the dart to land about 3.8 inches from the bullseye, on average.

3.7.2 Normal approximation to the binomial

The value of a binomial random variable is the sum of many small, independent factors: the Bernoulli trials. A special case of the Central Limit Theorem is that a binomial random variable can be well approximated by a normal random variable.

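First, we need to understand the standard deviation of a binomial random variable.

Theorem Let \(X \sim \text{Binom}(n,p)\). Then the variance of \(X\) is \(np(1-p)\) and the standard deviation is \(\sqrt{np(1-p)}\).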

The proof of this theorem was given as an example in Section 3.6. There is also an instructive proof that is similar to the proof of Theorem 3.3, except that we take the derivative of the binomial theorem two times and compute \(E[X(X-1)]\). The result follows from \(E[X^2] = E[X(X-1)] + E[X]\) and Theorem 3.9.

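Now the binomial rv \(X\) can be approximated by a normal random variable with the same mean and standard deviation as \(X\): for large \(n\), \(X \sim \text{Binom}(n,p)\) is approximately \(\text{Normal}(np, \sqrt{np(1-p)})\).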

The size of \(n\) required to make the normal approximation accurate depends on the accuracy required and also depends on \(p\). Binomial distributions with \(p\) close to 0 or 1 are not as well approximated by the normal distribution as those with \(p\) near 1/2.

This normal approximation was traditionally used to work with binomial random variables, since calculating the binomial distribution exactly requires quite a bit of computation. Probabilities for the normal distribution were readily available in tables, and so easier to use. With R, the pbinom function makes it easy to work with binomial pmfs directly.

Example Let \(X \sim \text{Binom}(300,0.46)\). Compute \(P(X > 150)\).

Computing exactly, \(P(X > 150) =\) 1 - pbinom(150,300,0.46) = 0.0740.

To use the normal approximation, we calculate that \(X\) has mean \(300 \cdot 0.46 = 138\) and standard deviation \(\sqrt{300\cdot0.46\cdot0.54} \approx 8.63\). Then \(P(X > 150) \approx\) 1 - pnorm(150,138,8.63) = 0.0822.

As an improvement, notice that the continuous normal variable can take values in between 150 and 151, but the discrete binomial variable cannot. To account for this, we use a continuity correction and assign each integer value of the binomial variable to the one-unit wide interval centered at that integer. Then 150 corresponds to the interval (149.5,150.5) and 151 corresponds to the interval (150.5,151.5). To approximate \(X > 150\), we want our normal random variable to be larger than 150.5. The normal approximation with continuity correction gives \(P(X > 150) \approx\) 1 - pnorm(150.5,138,8.63) = 0.0737, much closer to the actual value of 0.0740.
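The three computations in this example can be placed side by side. This sketch uses the unrounded standard deviation, so the last digit may differ slightly from the values quoted above; the variable names are illustrative.

```r
n <- 300
p <- 0.46
mu <- n * p                         # mean of the binomial
sigma <- sqrt(n * p * (1 - p))      # standard deviation of the binomial
exact        <- 1 - pbinom(150, n, p)          # exact P(X > 150)
approx_plain <- 1 - pnorm(150, mu, sigma)      # normal approximation
approx_cc    <- 1 - pnorm(150.5, mu, sigma)    # with continuity correction
round(c(exact = exact, plain = approx_plain, corrected = approx_cc), 4)
```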

3.8 Other special random variables

In this section, we discuss other commonly occurring special types of random variable. Two of these (the uniform and exponential) have already shown up as earlier examples of continuous distributions.

3.8.1 Poisson and exponential random variables

A Poisson process models events that happen at random times. For example, radioactive decay is a Poisson process, where each emission of a radioactive particle is an event. Other examples modeled by Poisson process are meteor strikes on the surface of the moon, customers arriving at a store, hits on a web page, and car accidents on a given stretch of highway.

In this section, we will discuss two natural random variables attached to a Poisson process. The Poisson random variable is discrete, and can be used to model the number of events that happen in a fixed time period. The exponential random variable is continuous, and models the length of time for the next event to occur.

We begin by defining a Poisson process. Suppose events occur spread over time. If the occurrences of the event satisfy the following properties, then the events form a Poisson process:

1. The probability of an event occurring in a time interval \([a,b]\) depends only on the length of the interval \([a,b]\).

2. If \([a,b]\) and \([c,d]\) are disjoint time intervals, then the probability that an event occurs in \([a,b]\) is independent of whether an event occurs in \([c,d]\). (That is, knowing that an event occurred in \([a,b]\) does not change the probability that an event occurs in \([c,d]\).)

3. Two events cannot happen at the same time. (Formally, we need to say something about the probability that two or more events happen in the interval \([a, a + h]\) as \(h\to 0\).)

4. The probability of an event occurring in a time interval \([a,a + h]\) is roughly \(\lambda h\), for some constant \(\lambda\).

Property (4) says that events occur at a certain rate, which is denoted by \(\lambda\).

3.8.1.1 Poisson

Theorem Let \(X\) be the number of events of a Poisson process with rate \(\lambda\) that occur in a time interval of length 1. Then \(X\) is a Poisson random variable with rate \(\lambda\), with pmf \[ p(x) = \frac{\lambda^x}{x!} e^{-\lambda}, \qquad x = 0, 1, 2, \ldots \]

We will not prove this theorem (indeed, we haven’t stated condition 4 in the definition of Poisson process precisely enough to even do so), but we do provide a heuristic. Suppose we break up the time interval from 0 to 1 into \(n\) pieces of equal length. Let \(X_i\) be the number of events that happen in the \(i\)th interval. When \(n\) is big, \((X_i)_{i = 1}^n\) is approximately a sequence of \(n\) Bernoulli trials, each with probability of success \(\lambda/n\). Therefore, if we let \(Y_n\) be a binomial random variable with \(n\) trials and probability of success \(\lambda/n\), we have:

\[\begin{align*} P(X = x) &= P\Bigl(\sum_{i = 1}^n X_i = x\Bigr)\\ &\approx P(Y_n = x)\\ &= {\binom{n}{x}} (\lambda/n)^x (1 - \lambda/n)^{(n - x)} \\ &\approx \frac{n^x}{x!} \frac{1}{n^x} \lambda^x (1 - \lambda/n)^n\\ &\to \frac{\lambda^x}{x!}{e^{-\lambda}} \qquad \text{as } n\to \infty \end{align*}\]

The key to defining the Poisson process formally correctly is to ensure that the above computation is mathematically justified.

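We leave the proof that \(\sum_x p(x) = 1\) as Exercise 39, along with the proof of the following theorem: the mean and the variance of a Poisson random variable with rate \(\lambda\) are both equal to \(\lambda\).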

Though the proof of the mean and variance of a Poisson is an exercise, we can also give a heuristic argument. From above, a Poisson with rate \(\lambda\) is approximately a Binomial rv \(Y_n\) with \(n\) trials and probability of success \(\lambda/n\) when \(n\) is large. The mean of \(Y_n\) is \(n \times \lambda/n = \lambda\), and the variance is \(n (\lambda/n)(1 - \lambda/n) \to \lambda\) as \(n\to\infty\).
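The binomial heuristic can be observed numerically by comparing the pmf of \(Y_n\) with the Poisson pmf as \(n\) grows. The rate \(\lambda = 2\) and the values of \(n\) below are illustrative assumptions.

```r
lambda <- 2            # illustrative rate
x <- 0:10              # values at which the two pmfs are compared
# Largest gap between the Binom(n, lambda/n) pmf and the Pois(lambda) pmf
max_diff <- sapply(c(10, 100, 10000), function(n)
  max(abs(dbinom(x, n, lambda / n) - dpois(x, lambda))))
max_diff               # shrinks toward 0 as n increases
```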

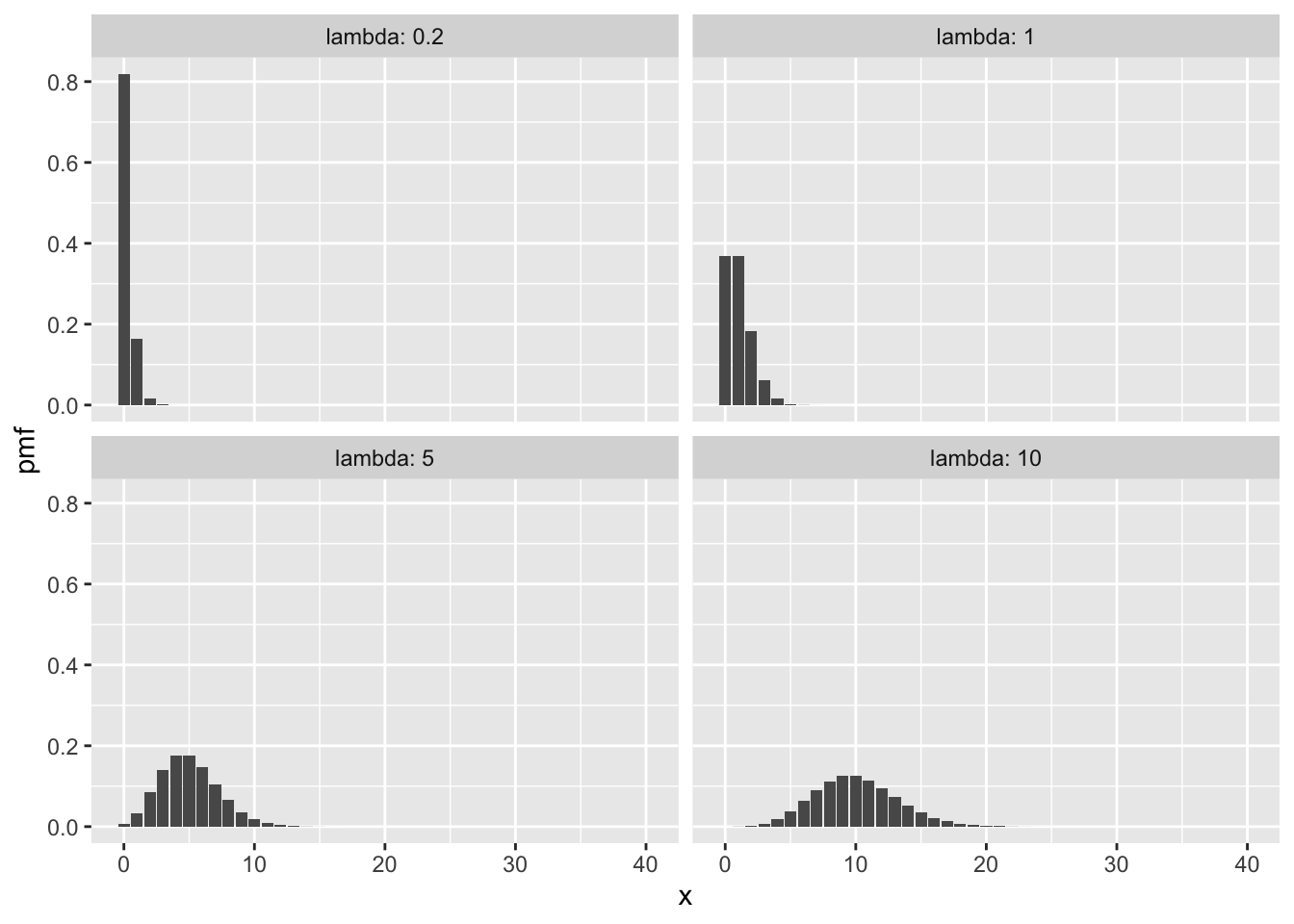

Here are some plots of Poisson pmfs with various means \(\lambda\).

Observe in the plots that larger values of \(\lambda\) correspond to more spread-out distributions, since the standard deviation of a Poisson rv is \(\sqrt{\lambda}\). Also, for larger values of \(\lambda\), the Poisson distribution becomes approximately normal.

Example The Taurids meteor shower is visible on clear nights in the Fall and can have visible meteor rates around five per hour. What is the probability that a viewer will observe exactly eight meteors in two hours?

We let \(X\) be the number of observed meteors in two hours, and model \(X \sim \text{Pois}(10)\), since we expect \(\lambda = 10\) meteors in our two hour time period. Computing exactly, \[ P(X = 8) = \frac{10^8}{8!}e^{-10} \approx 0.1126.\]

Using R, and the pmf dpois:
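A sketch of the call, together with the formula evaluated directly for comparison:

```r
# P(X = 8) for X ~ Pois(10)
dpois(8, lambda = 10)
# The same value from the pmf formula
10^8 / factorial(8) * exp(-10)
```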

## [1] 0.112599

Or, by simulation with rpois:
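A sketch of the simulation, assuming 10000 simulated two-hour viewing sessions (the sample size and the name meteors are illustrative):

```r
# Simulate the number of meteors seen in two hours, many times over
meteors <- rpois(10000, 10)
mean(meteors == 8)   # proportion of sessions with exactly 8 meteors
```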

## [1] 0.1191

We find that the probability of seeing exactly 8 meteors is about 0.11.

Example Suppose a typist makes typos at a rate of 3 typos per 10 pages. What is the probability that they will make at most one typo on a five page document?

We let \(X\) be the number of typos in a five page document. Assume that typos follow the properties of a Poisson rv. It is not clear that they follow it exactly. For example, if the typist has just made a mistake, it is possible that their fingers are no longer on home position, which means another mistake is likely soon after. This would violate the independence property (2) of a Poisson process. Nevertheless, modeling \(X\) as a Poisson rv is reasonable.

The rate at which typos occur per five pages is 1.5, so we use \(\lambda = 1.5\). Then we can compute \(P(X \leq 1) = \text{ppois}(1,1.5)=0.5578\). The typist has a 55.78% chance of making at most one typo on a five page document.

3.8.1.2 Exponential

An exponential random variable \(X\) with rate \(\lambda\) has pdf \[ f(x) = \lambda e^{-\lambda x}, \qquad x > 0 \]

Exponential rv’s measure the waiting time until the first event occurs in a Poisson process. The waiting time until an electronic component fails could be exponential. In a store, the time between customers could be modeled by an exponential random variable by starting the Poisson process at the moment the first customer enters.

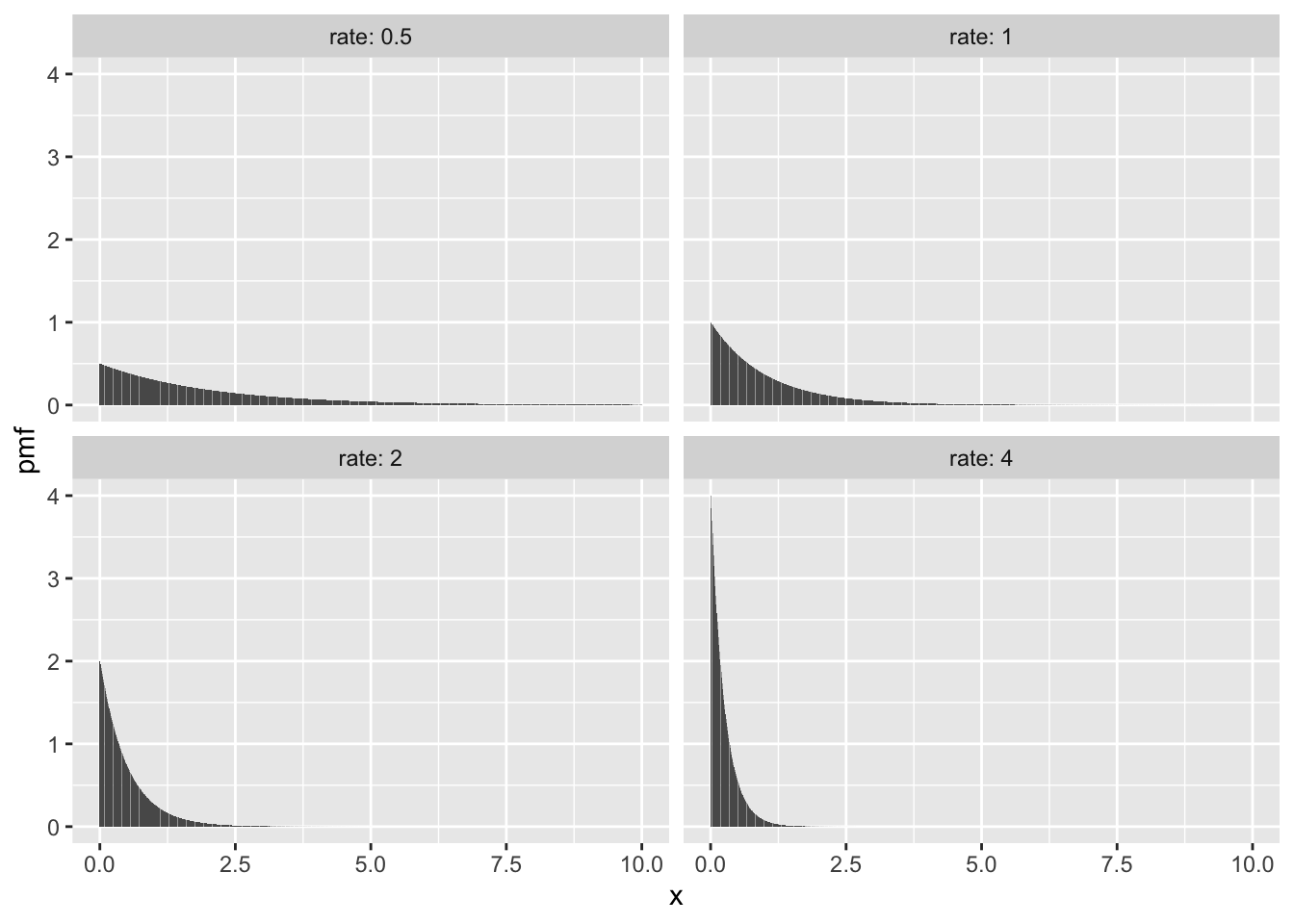

Here are some plots of pdf’s with various rates.

Observe from the pictures that the higher the rate, the smaller \(X\) will be. This is because we generally wait less time for events that occur more frequently.

We compute the mean of an exponential random variable with rate \(\lambda\) using integration by parts as follows: \[\begin{align*} E[X]&=\int_{-\infty}^\infty x f(x)\, dx\\ &=\int_0^\infty x \lambda e^{-\lambda x}\, dx + \int_{-\infty}^0 x \cdot 0 \, dx\\ &= \left(-xe^{-\lambda x} - \frac {1}{\lambda} e^{-\lambda x}\right)\Big|_{x = 0}^{x = \infty}\\ & = \frac {1}{\lambda} \end{align*}\]

For the variance, we compute the rather challenging integral: \[ E[X^2] = \int_0^\infty x^2 \lambda e^{-\lambda x} dx = \frac{2}{\lambda^2} \] Then \[ \text{var}(X) = E[X^2] - E[X]^2 = \frac{2}{\lambda^2} - \left(\frac{1}{\lambda}\right)^2 = \frac{1}{\lambda^2}. \]

Example When watching the Taurids meteor shower, meteors arrive at a rate of five per hour. How long do you expect to wait for the first meteor? How long should you wait to have a 95% chance of seeing a meteor?

Here, \(X\) is the length of time before the first meteor. We model the meteors as a Poisson process with rate \(\lambda = 5\) (and time in hours). Then \(E[X] = \frac{1}{5} = 0.2\) hours, or 12 minutes.

For a 95% chance, we are interested in finding \(x\) so that \(P(X < x) = 0.95\). One way to approach this is by playing with values of \(x\) in the R function pexp(x,5). Some effort will yield pexp(0.6,5) = 0.95, so that we should plan on waiting 0.6 hours, or 36 minutes to be 95% sure of seeing a meteor.

A more straightforward approach is to use the inverse cdf function qexp, which gives
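A sketch of the call, with the rate given in meteors per hour (the name wait_95 is illustrative):

```r
# Waiting time x (in hours) with P(X < x) = 0.95 for rate 5 per hour
wait_95 <- qexp(0.95, rate = 5)
wait_95
wait_95 * 60   # the same waiting time in minutes
```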

## [1] 0.5991465

and the exact waiting time of 0.599 hours.

Example The memoryless property of exponential random variables is the equation \[ P(X > s + t|X > s) = P(X > t) \] for any \(s,t > 0\). It helps to interpret this equation in the context of a Poisson process, where \(X\) measures waiting time for some event. The left hand side of the equation is the probability that we wait \(t\) longer, given that we have already waited \(s\). The right hand side is the probability that we wait \(t\), from the beginning. Because these two probabilities are the same, it means that waiting \(s\) has gotten us no closer to the occurrence of the event. The Poisson process has no memory that you have “already waited” \(s\).

We prove the memoryless property here by computing the probabilities involved. The cdf of an exponential random variable with rate \(\lambda\) is given by \[ F(x) = \int_{0}^x \lambda e^{-\lambda u}\, du = 1 - e^{-\lambda x} \] for \(x > 0\). Then \(P(X > x) = 1 - F(x) = e^{-\lambda x}\), and \[\begin{align*} P(X > s + t|X > s) &= \frac{P(X > s + t \cap X > s)}{P(X > s)} \\ &=\frac{P(X > s + t)}{P(X > s)}\\ &=e^{-\lambda(s + t)}/e^{-\lambda s}\\ &=e^{-\lambda t}\\ &=P(X > t) \end{align*}\]
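The memoryless property can also be checked numerically with pexp. The rate and the waiting times below are illustrative assumptions.

```r
lambda <- 5      # illustrative rate
s_wait <- 0.3    # time already waited
t_wait <- 0.2    # additional waiting time
# Left side: P(X > s + t | X > s) = P(X > s + t) / P(X > s)
lhs <- (1 - pexp(s_wait + t_wait, lambda)) / (1 - pexp(s_wait, lambda))
# Right side: P(X > t)
rhs <- 1 - pexp(t_wait, lambda)
c(lhs = lhs, rhs = rhs)
```

Both entries equal \(e^{-\lambda t} = e^{-1}\) for these values.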

3.8.2 Uniform random variables

Uniform random variables may be discrete or continuous.

A discrete uniform variable may take any one of finitely many values, all equally likely. The classic example is the die roll, which is uniform on the numbers 1,2,3,4,5,6. Another example is a coin flip, where we assign 1 to heads and 0 to tails. Unlike most other named random variables, R has no special functions for working with discrete uniform variables. Instead, we use sample to simulate these.
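As a brief sketch of simulating discrete uniform variables with sample (the numbers of rolls and flips are illustrative choices):

```r
# Simulate 10000 rolls of a fair die
rolls <- sample(1:6, 10000, replace = TRUE)
table(rolls) / length(rolls)   # each proportion should be near 1/6
# Simulate 10 coin flips, with 1 for heads and 0 for tails
flips <- sample(c(0, 1), 10, replace = TRUE)
flips
```

The argument replace = TRUE is needed because the same value can occur on more than one roll or flip.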

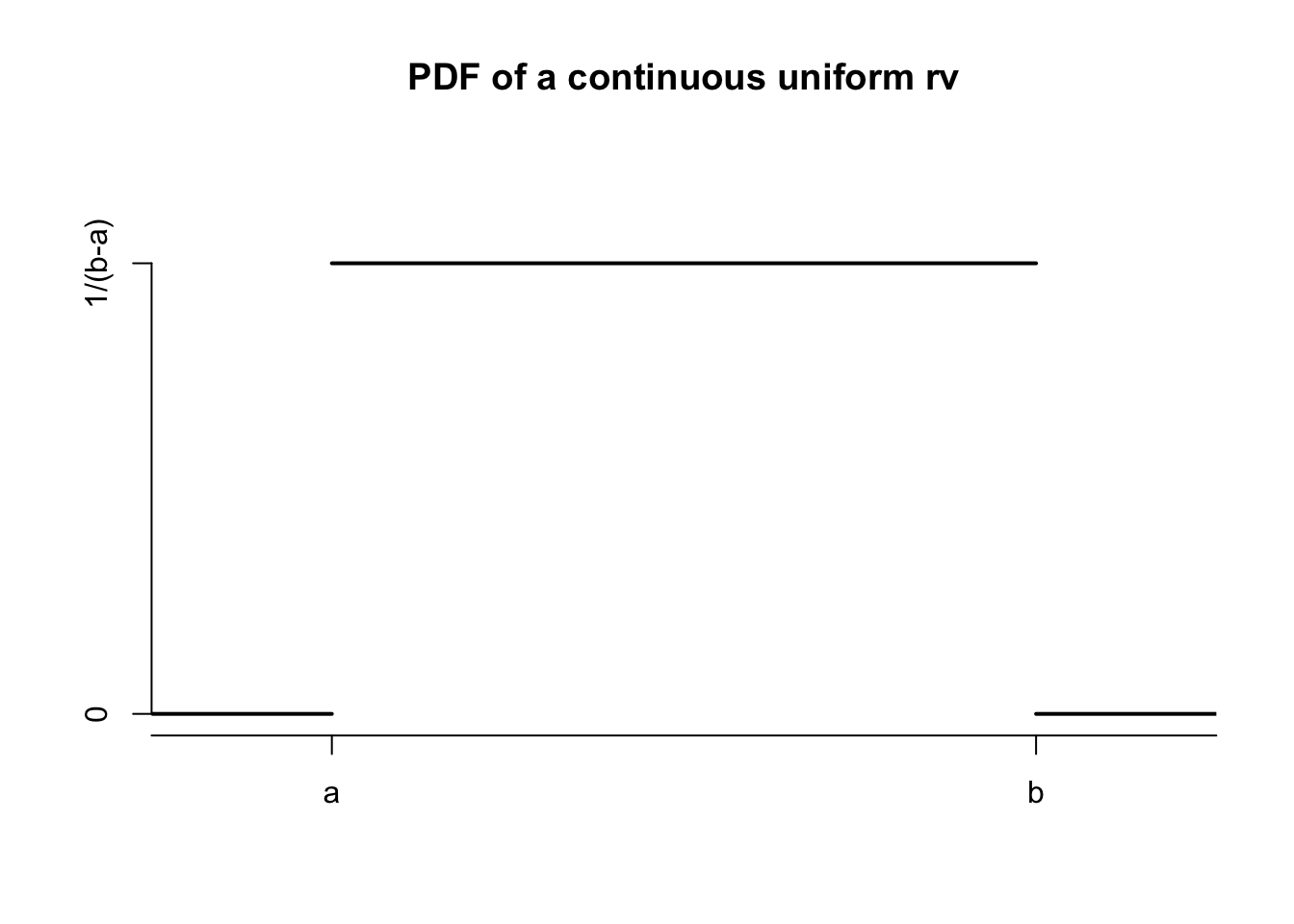

We write \(X \sim \text{Unif}(a,b)\) if \(X\) is continuous uniform on the interval \([a,b]\). The rv \(X\) is characterized by the property that for any interval \(I \subset [a,b]\), the probability \(P(X \in I)\) depends only on the length of \(I\).

The pdf of \(X\) is given by \[ f(x) = \begin{cases} \frac{1}{b -a} & a \le x \le b\\0&{\rm otherwise} \end{cases} \]

One example of a random variable that could be modeled by a uniform random variable is round-off error in measurements. Say we measure height and record only feet and inches. It is a reasonable first approximation that the error associated with rounding to the nearest inch is uniform on the interval \([-1/2,1/2]\). There may be other sources of measurement error which might not be well modeled by a uniform random variable, but the round-off is uniform.

Another example is related to the Poisson process. If you observe a Poisson process after some length of time \(T\) and see that exactly one event has occurred, then the time that the event occurred in the interval \([0, T]\) is uniformly distributed.

Example A random real number is chosen uniformly in the interval 0 to 10. What is the probability that it is bigger than 7, given that it is bigger than 6?

Let \(X \sim \text{Unif}(0,10)\) be the random real number. Then \[ P(X > 7\ |\ X > 6) = \frac{P(X > 7 \cap X > 6)}{P(X > 6)} = \frac{P(X > 7)}{P(X > 6)} =\frac{3/10}{4/10} = \frac{3}{4}. \]

Alternately, we can compute with the punif function, which gives the cdf of a uniform random variable.
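A sketch of the computation, writing each tail probability as one minus the cdf (the variable names are illustrative):

```r
# P(X > 7) and P(X > 6) for X ~ Unif(0, 10)
p_gt7 <- 1 - punif(7, min = 0, max = 10)
p_gt6 <- 1 - punif(6, min = 0, max = 10)
p_gt7 / p_gt6   # conditional probability P(X > 7 | X > 6)
```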

## [1] 0.75

It turns out that the conditional distribution of a uniform random variable on the interval \([a,b]\), given that it lies in the subinterval \([c,d]\), is uniform on \([c,d]\). Applying that fact to the above example, we know that \(X\) given \(X > 6\) is uniform on the interval \([6, 10]\). Therefore, the probability that it is larger than 7 is just 3/4. Note that this only works for uniform random variables! For other random variables, you will need to reason as in the example.

We finish this section with a computation of the mean and variance of a uniform random variable \(X\). Not surprisingly, the mean is exactly halfway along the interval of possible values for \(X\).

We compute the mean of \(X\) as follows: \[\begin{align*} E[X]&= \int_a^b \frac{x}{b-a}\, dx\\ &=\frac{x^2}{2(b - a)}|_{x = a}^{x = b}\\ &=\frac{(b - a)(b + a)}{2(b - a)}\\ &=\frac{a+b}{2}. \end{align*}\]

For the variance, first calculate \(E[X^2] = \int_a^b \frac{x^2}{b-a}dx\). Then \[ \text{var}(X) = E[X^2] - E[X]^2 = E[X^2] - \left(\frac{a+b}{2}\right)^2. \] Working the integral and simplifying \(\text{var}(X)\) is left as Exercise 25.

3.8.3 Negative binomial

Example Suppose you repeatedly roll a fair die. What is the probability of getting exactly 14 non-sixes before getting your second 6?

As you can see, this is an example of repeated Bernoulli trials with \(p = 1/6\), but it isn’t exactly geometric because we are waiting for the second success. This is an example of a negative binomial random variable.

More generally, suppose that we observe a sequence of Bernoulli trials with probability of success \(p\). If \(X\) denotes the number of failures before the \(n\)th success, then \(X\) is a negative binomial random variable with parameters \(n\) and \(p\). The probability mass function of \(X\) is given by

\[ p(x) = \binom{x + n - 1}{x} p^n (1 - p)^x, \qquad x = 0,1,2\ldots \]

The mean of a negative binomial is \(n(1 - p)/p\), and the variance is \(n(1 - p)/p^2\). The root R function to use with negative binomials is nbinom, so dnbinom is how we can compute values in R. The function dnbinom uses prob for \(p\) and size for \(n\) in our formula. So, to continue with the example above, the probability of obtaining exactly 14 non-sixes before obtaining 2 sixes is:
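A sketch of the call, with a direct evaluation of the pmf formula for comparison:

```r
# P(exactly 14 failures before the 2nd success), success probability 1/6
dnbinom(14, size = 2, prob = 1/6)
# The same value from the pmf formula with x = 14, n = 2
choose(14 + 2 - 1, 14) * (1/6)^2 * (5/6)^14
```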

## [1] 0.03245274

Note that when size = 1, negative binomial is exactly a geometric random variable, e.g.
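A sketch of the comparison, reusing the value 14 and success probability 1/6 from the die example (an assumption consistent with the output shown):

```r
# Negative binomial with size = 1 ...
dnbinom(14, size = 1, prob = 1/6)
# ... agrees with the geometric pmf
dgeom(14, prob = 1/6)
```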

## [1] 0.01298109
## [1] 0.01298109

3.8.4 Hypergeometric

Consider the experiment which consists of sampling without replacement from a population that is partitioned into two subgroups - one subgroup is labeled a “success” and one subgroup is labeled a “failure”. The random variable that counts the number of successes in the sample is an example of a hypergeometric random variable.

To make things concrete, we suppose that we have \(m\) successes and \(n\) failures. We take a sample of size \(k\) (without replacement) and we let \(X\) denote the number of successes. Then \[ P(X = x) = \frac{\binom{m}{x} {\binom{n}{k - x}} }{\binom{m + n}{k}} \] We also have \[ E[X] = k (\frac{m}{m + n}) \] which is easy to remember because \(k\) is the number of samples taken, and the probability of a success on any one sample (with no knowledge of the other samples) is \(\frac {m}{m + n}\). The variance is similar to the variance of a binomial as well, \[ V(X) = k \frac{m}{m+n} \frac {n}{m+n} \frac {m + n - k}{m + n - 1} \] but we have the “fudge factor” of \(\frac {m + n - k}{m + n - 1}\), which means the variance of a hypergeometric is less than that of a binomial. In particular, when \(m + n = k\), the variance of \(X\) is 0. Why?

When \(m + n\) is much larger than \(k\), we will approximate a hypergeometric random variable with a binomial random variable with \(k\) trials and probability of success \(p = \frac {m}{m + n}\). Finally, the R root for hypergeometric computations is hyper. In particular, we have the following example:

Example 15 US citizens and 20 non-US citizens pass through a security line at an airport. Ten are randomly selected for further screening. What is the probability that 2 or fewer of the selected passengers are US citizens?

In this case, \(m = 15\), \(n = 20\), and \(k = 10\). We are looking for \(P(X \le 2)\), so
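A sketch of the call: phyper takes the value first, then the number of successes m, the number of failures n, and the sample size k (the name p_two_or_fewer is illustrative).

```r
# P(X <= 2) when sampling 10 from 15 successes and 20 failures
p_two_or_fewer <- phyper(2, m = 15, n = 20, k = 10)
p_two_or_fewer
# The same value by summing the pmf formula over x = 0, 1, 2
sum(choose(15, 0:2) * choose(20, 10 - 0:2)) / choose(35, 10)
```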

## [1] 0.08677992

3.9 Independent random variables

We say that two random variables, \(X\) and \(Y\), are independent if knowledge of the outcome of \(X\) does not give probabilistic information about the outcome of \(Y\) and vice versa. As an example, let \(X\) be the amount of rain (in inches) recorded at Lambert Airport on a randomly selected day in 2017, and let \(Y\) be the height of a randomly selected person in Botswana. It is difficult to imagine that knowing the value of one of these random variables could give information about the other one, and it is reasonable to assume that the rvs are independent. On the other hand, if \(X\) and \(Y\) are the height and weight of a randomly selected person in Botswana, then knowledge of one variable could well give probabilistic information about the other. For example, if you know a person is 72 inches tall, it is unlikely that they weigh 100 pounds.

We would like to formalize that notion with conditional probability. The natural statement is that for any subsets \(E\) and \(F\) of \({\mathbb R}\), the conditional probability \(P(X\in E|Y\in F)\) is equal to \(P(X \in E)\). There are several issues with formalizing the notion of independence that way, so we give a definition that is somewhat further removed from the intuition.

The definition mirrors the multiplication rule for independent events.

Definition 3.8 Suppose \(X\) and \(Y\) are random variables:

\(X\) and \(Y\) are independent \(\iff\) For all \(x\) and \(y\), \(P(X \le x, Y \le y) = P(X \le x) P(Y \le y)\).

For discrete random variables, this is equivalent to saying that \(P(X = x, Y = y) = P(X = x)P(Y = y)\) for all \(x\) and \(y\).

For our purposes, we will often be assuming that random variables are independent.

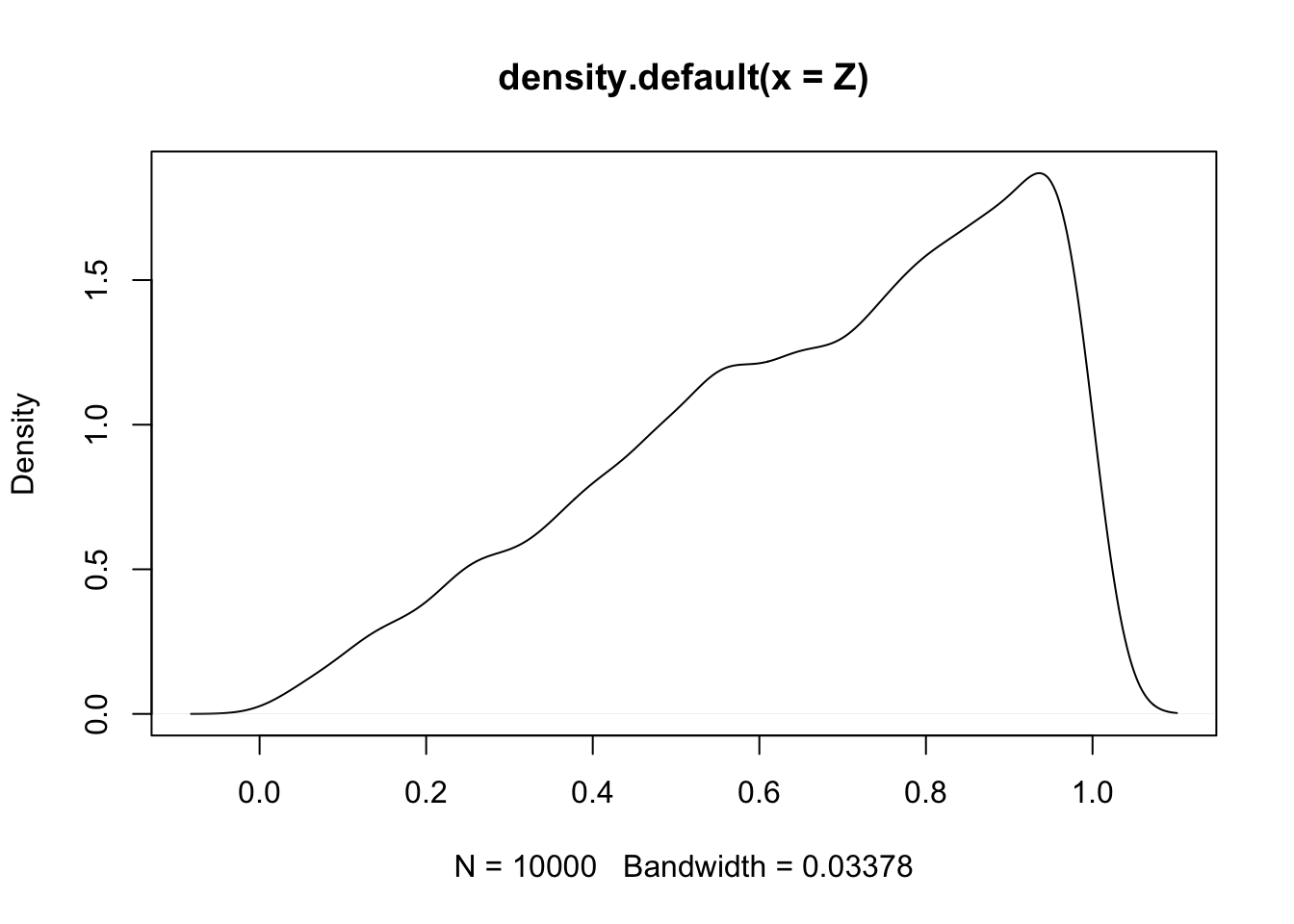

Example Let \(X\) and \(Y\) be independent uniform random variables on the interval \([0,1]\). Find the cumulative distribution function for the random variable \(Z\) which is the larger of \(X\) and \(Y\).

Let’s start with an observation. Note that \(Z \le z\) exactly when both \(X \le z\) and \(Y \le z\). Therefore, we can rewrite \(F_Z(z) = P(Z \le z) = P(X \le z, Y \le z) = P(X \le z)P(Y \le z)\), where the last equality uses the independence of \(X\) and \(Y\). Since the cdf of a uniform is given by \(F(x) = \begin{cases} 0&x < 0\\x&0\le x \le 1\\1&x > 1\end{cases}\), we obtain the solution of \[ F_Z(z) = \begin{cases}0& z < 0\\z^2&0\le z \le 1\\1&z > 1\end{cases} \]

We compute the pdf of \(Z\) by differentiating \(F_Z(z)\): \[ f_Z(z) = \begin{cases}0& z < 0\\2z&0\le z \le 1\\0&z > 1\end{cases} \]

We can check this by simulation by generating \(X\), \(Y\), and then the maximum of \(X\) and \(Y\). Note that the R function max returns the single largest value among all of its inputs. Here, we use pmax, which gives the maximum of each pair of elements in the two given vectors.
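The code for this check is not reproduced here, so the following is a minimal sketch of how such a simulation might look. The seed, the sample size N, and the plot labels are our own choices for illustration.

```r
set.seed(1)   # arbitrary seed so the sketch is reproducible
N <- 10000    # illustrative number of simulated pairs

X <- runif(N, 0, 1)
Y <- runif(N, 0, 1)
Z <- pmax(X, Y)   # elementwise maximum; max(X, Y) would return one number

# Histogram on the density scale, with the theoretical pdf 2z overlaid
hist(Z, freq = FALSE, main = "Maximum of two uniforms", xlab = "z")
curve(2 * x, from = 0, to = 1, add = TRUE)

# Empirical estimate of P(Z <= 0.5); the theoretical value is 0.5^2 = 0.25
mean(Z <= 0.5)
```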

Observe that the distribution of \(Z\) values follows the theoretical pdf function.

Later in this text, we will need to assume that many random variables are independent. We will not make use of the precise mathematical definition later, but for completeness we include it here:

3.10 Summary

This chapter introduced the notion of a random variable, and the associated notion of a probability distribution. For any random variable, we might be interested in answering probability questions either exactly or through simulation. Usually, these questions involve knowledge of the probability distribution. For some commonly occurring types of random variable, the probability distribution functions are well understood.

Here is a list of the random variables that we introduced in this chapter, together with pmf/pdf, expected value, variance and root R function.

| RV | PMF/PDF | Range | Mean | Variance | R Root |
|---|---|---|---|---|---|
| Binomial | \({\binom{n}{x}} p^x(1 - p)^{n - x}\) | \(0\le x \le n\) | \(np\) | \(np(1 - p)\) | binom |
| Geometric | \(p(1-p)^{x}\) | \(x \ge 0\) | \(\frac{1-p}{p}\) | \(\frac{1-p}{p^2}\) | geom |
| Poisson | \(\frac {1}{x!} \lambda^x e^{-\lambda}\) | \(x \ge 0\) | \(\lambda\) | \(\lambda\) | pois |
| Uniform | \(\frac{1}{b - a}\) | \(a \le x \le b\) | \(\frac{a + b}{2}\) | \(\frac{(b - a)^2}{12}\) | unif |
| Exponential | \(\lambda e^{-\lambda x}\) | \(x \ge 0\) | \(1/\lambda\) | \(1/\lambda^2\) | exp |
| Normal | \(\frac 1{\sigma\sqrt{2\pi}} e^{-(x - \mu)^2/(2\sigma^2)}\) | \(-\infty < x < \infty\) | \(\mu\) | \(\sigma^2\) | norm |
| Hypergeometric | \(\frac{{\binom{m}{x}} {\binom{n}{k-x}}}{{\binom{m+n}{k}}}\) | \(x = 0,\ldots,k\) | \(kp\), where \(p = \frac{m}{m+n}\) | \(kp(1-p)\frac{m + n - k}{m + n - 1}\) | hyper |
| Negative Binomial | \(\binom{x + n - 1}{x} p^n (1 - p)^x\) | \(x \ge 0\) | \(n\frac{1-p}p\) | \(n\frac{1-p}{p^2}\) | nbinom |
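Any row of this table can be checked by simulation. As a minimal sketch, the code below does so for the geometric row; the value \(p = 0.2\), the seed, and the sample size are arbitrary choices made for illustration.

```r
set.seed(3)   # arbitrary seed so the sketch is reproducible
N <- 100000   # illustrative sample size
p <- 0.2      # illustrative success probability

x <- rgeom(N, p)   # number of failures before the first success

# Simulated mean and variance next to the formulas from the table
c(simulated_mean = mean(x), table_mean = (1 - p) / p)
c(simulated_var  = var(x),  table_var  = (1 - p) / p^2)

# The pmf column can be compared directly with dgeom
all.equal(dgeom(0:5, p), p * (1 - p)^(0:5))
```

The same pattern should work for the other rows by swapping the root (for example rpois and dpois) and the corresponding formulas.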

When modeling a count of something, you often need to choose between binomial, geometric, and Poisson. The binomial and geometric random variables both come from Bernoulli trials, where there is a sequence of individual trials each resulting in success or failure. In the Poisson process, events are spread over a time interval, and appear at random.

The normal random variable is a good starting point for continuous measurements that have a central value and become less common away from that mean. Exponential variables show up when waiting for events to occur. Continuous uniform variables sometimes occur as the location of an event in time or space, when the event is known to have happened on some fixed interval.

R provides these random variables (and many more!) through a set of four functions for each known distribution.

The four functions are determined by a prefix, which can be p, d, r, or q. The root determines which distribution we are talking about.

Each distribution function takes a single argument first, determined by the prefix, and then some number of parameters, determined by the root. The general form of a distribution function in R is:

[prefix][root] ( argument, parameter1, parameter2, ..)

The available prefixes are:

- p: compute the cumulative distribution function \(P(X \le x)\), and the argument is \(x\).
- d: compute the pdf or pmf \(f\). The value is \(f(x)\), and the argument is \(x\). In the discrete case, this is the probability \(P(X = x)\).
- r: sample from the rv. The argument is \(N\), the number of samples to take.
- q: quantile function, the inverse cdf. This computes \(x\) so that \(P(X \le x) = q\), and the argument is \(q\).

The distributions we have used in this chapter, with their parameters are:

- binom is binomial, parameters are \(n\), \(p\).
- geom is geometric, parameter is \(p\).
- norm is normal, parameters are \(\mu, \sigma\).
- pois is Poisson, parameter is \(\lambda\).
- exp is exponential, parameter is \(\lambda\).
- unif is uniform, parameters are \(a, b\).

There will be many more distributions to come, and the four prefixes work the same way for all of them.
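As a short illustration of the prefix and root pattern, the sketch below applies all four prefixes to the binom root. The parameters \(n = 10\) and \(p = 0.5\) are arbitrary values chosen for the example.

```r
n <- 10    # illustrative number of trials
p <- 0.5   # illustrative success probability

dbinom(3, n, p)     # pmf: P(X = 3), which is choose(10, 3) / 2^10
pbinom(3, n, p)     # cdf: P(X <= 3)
qbinom(0.5, n, p)   # quantile: smallest x with P(X <= x) >= 0.5
rbinom(5, n, p)     # five random samples of X

# The cdf is the running sum of the pmf
all.equal(pbinom(3, n, p), sum(dbinom(0:3, n, p)))
```

For a discrete random variable an exact solution of \(P(X \le x) = q\) may not exist, which is why the quantile function returns the smallest \(x\) whose cdf value is at least \(q\).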

Vignette: An R Markdown Primer

RStudio has included a method for making higher quality documents from a combination of R code and some basic markdown formatting, which is called R Markdown. For students reading this book, writing your homework in R Markdown will allow you to have reproducible results. It is much more efficient than creating plots in R and copy/pasting into Word or some other document. Professionals may want to write reports in R Markdown. This vignette gives the basics, with a leaning toward homework assignments.

In order to create an R Markdown file, inside RStudio you click on File, then New File and R Markdown. You will be prompted to enter a Title and an Author. For now, leave the Default Output Format as .html and leave the Document icon highlighted. When you have entered an acceptable title and author, click OK and a new tab in the Source pane should open up. (That is the top left pane in RStudio.) The very first thing that we would do is click on the Knit button right above the Source pane. It should knit the document and give you a nice-ish looking document. Our goal in this vignette is to get you started making nicer looking homework and other documents in R Markdown. For further information, see Xie, Allaire and Grolemund’s book.

You should see something that looks like this, with some more things underneath.

---
title: "Untitled"
author: "Darrin Speegle"
date: "1/20/2021"
output: html_document
---

This part of the code is the YAML (YAML Ain’t Markup Language). Next is a bit of code that starts like this. You don’t have to change that, but you might experiment with the tufte style. You will need to install the tufte package in order to do this, and change your YAML to look like this.

---
title: "Homework 1"
author: "Darrin Speegle"
date: "1/20/2021"
output:
  tufte::tufte_handout: default
  tufte::tufte_html: default
---

```{r setup, include=FALSE}
knitr::opts_chunk$set(echo = TRUE,
                      message = FALSE,
                      warning = FALSE,
                      fig.width = 4,
                      fig.height = 4,
                      fig.margin = TRUE)
```

One benefit of using the tufte package is that in order for the document to look good, it requires you to write more text than you might otherwise. Many beginners tend to have too much R code and output (including plots), and not enough words. If you use the tufte package, you will see that the document doesn’t look right unless the proportion of words to plots and other elements is better.

Note that we have changed the title of the homework, and the default values for several things in the file. That is, we changed knitr::opts_chunk$set(echo = TRUE) to read knitr::opts_chunk$set(echo = TRUE, message = FALSE, warning = FALSE, fig.width = 4, fig.height = 4, fig.margin = TRUE). You should experiment with these options to find the set-up that works best for you. If you are preparing a document for presentation, for example, you would most likely want to have echo = FALSE.

Once you have made those changes to your own file, click on the Knit button just above the Source pane. A window should pop up that says Homework 1, followed by your name and the date that you entered. If that didn’t happen, redo the above. Note that the figures appear in the margins of the text. Often, this is what we want, because otherwise the figures don’t line up well with where we are reading. However, if there is a large figure, then this will not work well. You would then add fig.fullwidth = TRUE as an option to your chunk, so that the chunk begins with ```{r fig.fullwidth = TRUE}.

For the purposes of this primer, we are mostly assuming that you are typing up solutions to a homework. Add the following at the bottom of the file you have already created and Knit again.

1. Consider the `DrinksWages` data set in the `HistData` package.

a. Which trade had the lowest wages?

```{r}
# load the package that provides DrinksWages
library(HistData)
DrinksWages[which.min(DrinksWages$wage),]
```

We see that **factory worker** had the lowest wages; 12 shillings per week.
If there had been multiple professions with a weekly wage of 12 shillings per week,
then we would have only found one of them here.

b. Provide a histogram of the wages of the trades

```{r}
hist(DrinksWages$wage)
```

The weekly wages of trades in 1910 were remarkably symmetric. Values ranged from a
low of 12 shillings per week for factory workers to a high of 40 shillings per
week for rivetters and glassmakers.

Notes

- Putting a 1. at the beginning of a line will start a numbered list.
- Putting DrinksWages inside backticks will make the background shaded, so that it is clear that it is an R object of some sort. Putting ** before and after a word or phrase makes it bold.
- The part beginning with ```{r} and ending with ``` is called an R chunk. You can create a new R chunk by using Command + Option + I on a Mac, or Ctrl + Alt + I on Windows. When you knit your file, R Markdown puts the commands that it used in a shaded box, to indicate that it is R code. Then, it also executes the R commands and shows the output.
- Text should not be inside of the R chunk. You should put your text either above or below the chunk.
- Every R chunk probably needs to have some sort of explanation in words. Just giving the R code is often not sufficient for someone to understand what you are doing. You will need to explain what it is you have done. (This can often be done quite quickly, but sometimes can take quite a bit of time.)
- If you are using a package inside R Markdown, you must load the package inside your markdown file! We recommend loading all libraries at the top of the markdown file.
- You can include math via LaTeX commands. LaTeX is a very powerful typesetting system that produces high quality mathematical formulas. A couple of useful examples are $x^2$, which is \(x^2\), or $X_i$, which is \(X_i\).

In order to finish typing up the solutions to Homework 1, you would continue with your list of problems, and insert R chunks with the R code that you need plus some explanation in words for each problem.

When you are done, you should Knit the document again, and a new .html or .pdf will appear. The last thing we would say is that Markdown has a lot more typesetting that it can do than what we have indicated up to this point. There are many more good resources on this, for example Grolemund and Wickham, Ismay, or you can look at the tufte template in RStudio by clicking on File -> New File -> R Markdown -> From Template -> Tufte Handout.

Exercises

- Let \(X\) be a discrete random variable with probability mass function given by \[ p(x) = \begin{cases} 1/4 & x = 0 \\ 1/2 & x = 1\\ 1/8 & x = 2\\ 1/8 & x = 3 \end{cases} \]
  - Verify that \(p\) is a valid probability mass function.
  - Find the mean of \(X\).
  - Find \(P(X \ge 2)\).
  - Find \(P(X \ge 2\ |\ X \ge 1)\).

- Find the variance and standard deviation of the rv \(X\) from Exercise 1.

- Roll two ordinary dice and let \(X\) be their sum. Draw the pmf for \(X\). Compute the mean and standard deviation of \(X\).

- Suppose you roll two ordinary dice. Calculate the expected value of their product.

- Suppose that a hat contains slips of paper containing the numbers 1, 2 and 3. Two slips of paper are drawn without replacement. Calculate the expected value of the product of the numbers on the slips of paper.

- Pick an integer from 0 to 999 with all possible numbers equally likely. What is the expected number of digits in your number?

- Go to the Missouri lottery Pick 3 web page http://www.molottery.com/pick3/pick3.jsp and compute the expected value of these bets:
  - $1 Front Pair
  - $1 Back Pair
  - $6 6-way combo
  - $3 3-way combo
  - $1 1-off

- Suppose you take a 20 question multiple choice test, where each question has four choices. You guess randomly on each question. What is your expected score? What is the probability you get 10 or more questions correct?

- Steph Curry is a 91% free throw shooter. If he shoots 10 free throws in a game, what is his expected number of shots made? What is the probability that he makes at least 8 free throws?

- Suppose 27 people write their names down on slips of paper and put them in a hat. Each person then draws one name from the hat. Estimate the expected value and standard deviation of the number of people who draw their own name. (Assume no two people have the same name!)

- Suppose that 55% of voters support Proposition A.
  - You poll 200 voters. What is the expected number that support the measure?
  - What is the margin of error for your poll (two standard deviations)?
  - What is the probability that your poll claims that Proposition A will fail?
  - How large a poll would you need to reduce your margin of error to 2%?

- Plot the pdf and cdf of a uniform random variable on the interval \([0,1]\).

- Compare the cdf and pdf of an exponential random variable with rate \(\lambda = 2\) with the cdf and pdf of an exponential rv with rate 1/2. (If you wish to read ahead in the section on plotting, you can learn how to put plots on the same axes, with different colors.)

-